In a data-driven economy, your database is more than a simple storage repository; it is the operational core of your business. A poorly managed database directly translates to sluggish application performance, critical security vulnerabilities, and expensive downtime, all of which erode customer trust and impact your bottom line. For small businesses in Omaha and growing e-commerce retailers alike, overlooking the health of your data infrastructure is a risk you cannot afford. This guide moves beyond abstract theory to provide a definitive list of essential database management best practices.

We will deliver a clear, actionable framework covering everything from foundational security protocols and performance tuning to comprehensive backup and recovery strategies. Each point is designed to be implemented immediately, offering practical steps to strengthen your data layer. By mastering these core principles, you can transform your database from a potential operational bottleneck into a powerful, reliable, and scalable asset. This article provides the blueprint to ensure your data infrastructure not only supports your current operations but is also primed for future growth and innovation. You will learn how to build a resilient system that secures your data, optimizes performance, and provides a solid foundation for your digital strategy.

1. Database Normalization

Database normalization is a foundational technique in database management best practices, pioneered by Edgar F. Codd. It involves structuring a relational database to minimize data redundancy and enhance data integrity. This process organizes columns and tables to ensure that data dependencies are logical, reducing the risk of data anomalies during updates, insertions, or deletions.

For instance, an e-commerce platform would separate customer, order, and product data into distinct tables. This ensures a customer's address is stored only once, not repeated for every order they place, preventing inconsistencies if their address changes.

Core Principles and Implementation

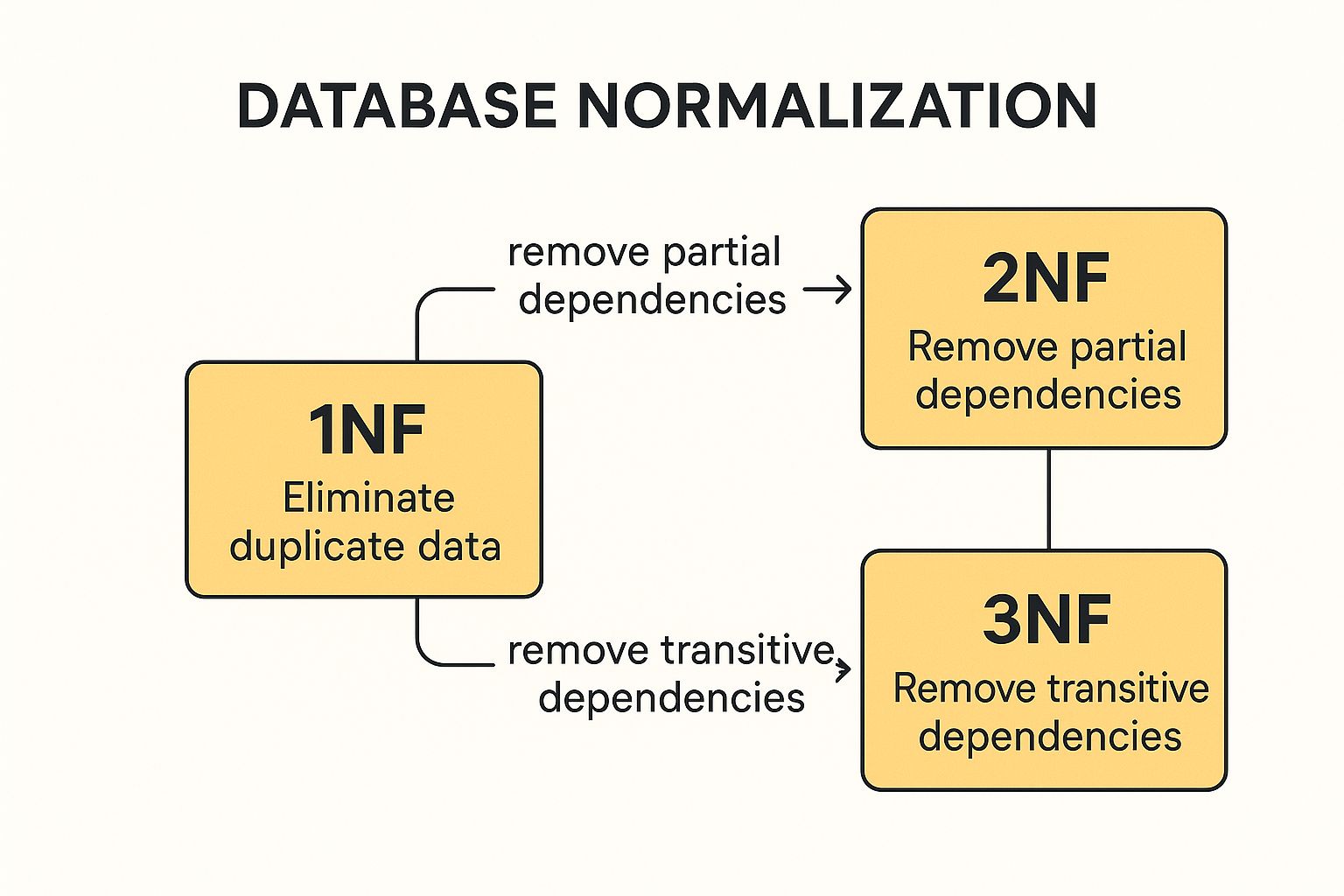

Normalization follows a series of guidelines called normal forms (NF). While several exist, most applications achieve optimal structure by adhering to the first three:

- First Normal Form (1NF): Ensures that table cells hold single values and each record is unique, typically by establishing a primary key.

- Second Normal Form (2NF): Builds on 1NF and requires that all non-key attributes are fully dependent on the entire primary key, eliminating partial dependencies.

- Third Normal Form (3NF): Extends 2NF by removing transitive dependencies, meaning non-key attributes cannot depend on other non-key attributes.

This concept map illustrates the progression through these fundamental normal forms.

The visualization highlights how each normal form builds upon the last to create a more efficient and logically sound database structure. For most business applications, achieving Third Normal Form (3NF) provides an excellent balance between data integrity and performance. Higher forms exist (BCNF, 4NF, 5NF), but they address rarer dependency patterns and are seldom needed in typical business schemas. However, in scenarios with heavy read traffic, like analytics dashboards, controlled denormalization might be used to improve query speed by intentionally re-introducing some redundancy.
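
To make the e-commerce example concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table and column names (customers, products, orders, price_cents) are illustrative assumptions, not a prescribed schema. It shows that once the address lives in one row, a single UPDATE is reflected in every order.

```python
import sqlite3

# In-memory database so the sketch leaves nothing behind on disk.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce foreign keys by default

# Hypothetical schema: each fact is stored exactly once.
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    address     TEXT NOT NULL
);
CREATE TABLE products (
    product_id  INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    price_cents INTEGER NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    product_id  INTEGER NOT NULL REFERENCES products(product_id),
    quantity    INTEGER NOT NULL
);
""")

conn.execute("INSERT INTO customers VALUES (1, 'Ada', '12 Old Street')")
conn.execute("INSERT INTO products VALUES (1, 'Mug', 1200)")
conn.executemany(
    "INSERT INTO orders (customer_id, product_id, quantity) VALUES (?, ?, ?)",
    [(1, 1, 2), (1, 1, 1)],
)

# The address changes in exactly one place...
conn.execute("UPDATE customers SET address = '34 New Avenue' WHERE customer_id = 1")

# ...and every order sees the new value through the join.
rows = conn.execute("""
    SELECT o.order_id, c.address
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()
print(rows)
```

Had the address been copied onto each order row, the same change would have required updating every order, and any row that was missed would leave the data inconsistent.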

2. Regular Database Backups

Regular database backups are a critical component of any robust data management strategy, involving the systematic creation and storage of copies of your data and its structure. This practice is the ultimate safety net, ensuring business continuity by enabling data recovery after events like hardware failure, data corruption, human error, or cyberattacks. Without a reliable backup plan, a single incident could lead to catastrophic and permanent data loss.

For example, a financial institution might use real-time transaction log backups to meet regulatory compliance and ensure no financial data is lost. Similarly, an e-commerce site relies on frequent backups to quickly restore operations and customer data after a server crash, minimizing revenue loss and reputational damage.

Core Principles and Implementation

A successful backup strategy is more than just copying files; it requires a structured approach that balances recovery time objectives (RTO) and recovery point objectives (RPO). Key strategies include:

- Full Backups: A complete copy of the entire database. While comprehensive, they are resource-intensive.

- Differential Backups: Copies all data that has changed since the last full backup. Faster to create than a full backup and requires the last full backup plus the latest differential for restoration.

- Incremental Backups: Copies only the data that has changed since the last backup of any type. These are the fastest to create but require a more complex restoration process.

Adhering to the 3-2-1 rule is a cornerstone of database management best practices: maintain at least three copies of your data, store them on two different types of media, and keep one copy off-site. Regularly testing your restoration procedures is just as important as creating the backups, as it verifies their integrity and ensures your team can execute a recovery when needed. Automating this entire process minimizes the risk of human error and guarantees consistency.
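
As a small illustration of "back up, then prove you can restore," the sketch below uses SQLite's online backup API, which Python exposes as Connection.backup. The orders table and the helper names full_backup and verify_backup are assumptions made for this example. A production setup would write to separate media and an off-site location rather than a second in-memory database.

```python
import sqlite3

def full_backup(source: sqlite3.Connection, dest: sqlite3.Connection) -> None:
    """Copy the entire source database into dest (a full backup)."""
    source.backup(dest)

def verify_backup(source: sqlite3.Connection, dest: sqlite3.Connection) -> bool:
    """Basic restore test: integrity check plus a row-count comparison.

    The 'orders' table is a hypothetical example table.
    """
    if dest.execute("PRAGMA integrity_check").fetchone()[0] != "ok":
        return False
    count_sql = "SELECT COUNT(*) FROM orders"
    return source.execute(count_sql).fetchone() == dest.execute(count_sql).fetchone()

# Stand-in for the live database.
live = sqlite3.connect(":memory:")
live.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, total_cents INTEGER)")
live.executemany("INSERT INTO orders (total_cents) VALUES (?)", [(1200,), (4550,)])
live.commit()

# Stand-in for the backup target.
copy = sqlite3.connect(":memory:")
full_backup(live, copy)
backup_ok = verify_backup(live, copy)
print(backup_ok)
```

A row count is only a coarse check. Comparing checksums or running a few representative queries against the restored copy would give stronger assurance.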

3. Database Indexing Strategy

A well-defined database indexing strategy is a critical database management best practice for accelerating data retrieval operations. An index acts like a lookup table for your database, allowing the query engine to find data without scanning every row in a table. This dramatically improves the performance of SELECT queries and WHERE clauses, which is essential for responsive applications.

For example, Amazon's product search relies heavily on sophisticated indexing to quickly filter through millions of items based on keywords, categories, and ratings. Similarly, financial trading platforms use indexes to provide real-time market data analysis, where query speed is paramount. These examples show how a smart indexing strategy directly impacts user experience and business operations.

Core Principles and Implementation

Implementing an effective indexing strategy involves understanding query patterns and the trade-offs between read and write performance. While indexes speed up reads, they can slightly slow down data modification operations (INSERT, UPDATE, DELETE) because the index also needs to be updated.

- Analyze Query Patterns: Use query analyzers to identify slow queries and index columns frequently used in WHERE, JOIN, and ORDER BY clauses.

- Use Composite Indexes: For queries that filter on multiple columns, create a composite index. The order of columns in the index should match the query's filtering logic for optimal performance.

- Maintain Indexes: Regularly monitor index usage to identify and remove unused or redundant indexes, which consume storage and add unnecessary overhead to write operations.

- Consider Index Types: Different databases offer various index types (e.g., B-Tree, Hash, Full-text). Choose the type that best fits the data and query requirements.

A proactive approach to indexing is a cornerstone of performance tuning. For platforms like WordPress, a thoughtful indexing plan is one of the most effective WordPress database optimization techniques to ensure a fast and scalable website. Striking the right balance between read acceleration and write overhead is key to a high-performing database.
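
One way to see the effect of a composite index is to compare the query plan before and after creating it. The sketch below does this with SQLite's EXPLAIN QUERY PLAN. The orders table, its columns, and the index name idx_orders_customer_status are assumptions for illustration, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL,
        status      TEXT NOT NULL,
        total_cents INTEGER NOT NULL
    )
""")

# A query that filters on two columns, the typical case for a composite index.
QUERY = "SELECT order_id, total_cents FROM orders WHERE customer_id = ? AND status = ?"

def query_plan(sql: str, params: tuple) -> str:
    """Return the human-readable plan SQLite would use for this query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the plan detail text

plan_before = query_plan(QUERY, (42, "shipped"))

# Composite index whose column order matches the query's filters.
conn.execute("CREATE INDEX idx_orders_customer_status ON orders (customer_id, status)")

plan_after = query_plan(QUERY, (42, "shipped"))

print("before:", plan_before)  # expected to describe a full table scan
print("after: ", plan_after)   # expected to mention the new index
```

Other engines expose the same idea through their own EXPLAIN commands, so the habit of checking the plan before and after an index change carries over.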

4. Database Security Implementation

Database security implementation is a critical component of database management best practices, encompassing a comprehensive strategy to protect data from unauthorized access, breaches, and malicious activities. This practice involves deploying multiple layers of defense, including robust authentication, granular authorization, data encryption, and continuous monitoring to ensure the confidentiality, integrity, and availability of information.

For example, a healthcare provider must implement stringent database security to comply with HIPAA, ensuring patient records are protected. Similarly, financial institutions rely on multi-factor authentication and encryption to secure sensitive customer data, while e-commerce platforms encrypt payment information to prevent fraud.

Core Principles and Implementation

A multi-faceted security model, pioneered by leaders like Oracle and Microsoft, is essential for creating a resilient database environment. Effective implementation relies on several key pillars:

- Principle of Least Privilege (PoLP): Grant users only the minimum permissions necessary to perform their job functions.

- SQL Injection Prevention: Use parameterized queries or prepared statements to neutralize one of the most common web application attack vectors.

- Regular Patching: Consistently update database software to protect against known vulnerabilities.

- Encryption: Encrypt sensitive data both at rest (on disk) and in transit (over the network) to make it unreadable if intercepted.

Implementing these measures is non-negotiable for any organization handling sensitive information. For a comprehensive approach to safeguarding your data, consider these proven data security best practices for remote teams that also apply broadly to database environments. This layered security approach not only protects against external threats but also mitigates risks from internal actors. Many of these database security measures are foundational to overall web application security best practices.
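
The SQL injection point is easiest to appreciate side by side. This sketch, using Python's sqlite3 module with a hypothetical users table, contrasts a query built by string formatting with a parameterized one. The function names are illustrative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (user_id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO users (email) VALUES (?)",
    [("ada@example.com",), ("bob@example.com",)],
)

def find_user_unsafe(email: str) -> list:
    # Anti-pattern: user input is pasted into the SQL text itself.
    sql = f"SELECT user_id, email FROM users WHERE email = '{email}'"
    return conn.execute(sql).fetchall()

def find_user_safe(email: str) -> list:
    # Parameterized query: the driver passes the value separately from the SQL.
    return conn.execute(
        "SELECT user_id, email FROM users WHERE email = ?", (email,)
    ).fetchall()

payload = "' OR '1'='1"  # classic injection string

leaked = find_user_unsafe(payload)  # the WHERE clause becomes always-true
safe = find_user_safe(payload)      # treated as a literal, matches nothing

print(len(leaked), len(safe))
```

With the unsafe version the payload rewrites the WHERE clause and returns every row. With the parameterized version the same input is compared as an ordinary string and returns nothing.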

5. Performance Monitoring and Optimization

Performance monitoring and optimization are critical database management best practices that involve continuously tracking key metrics to identify and resolve system bottlenecks. This proactive approach ensures the database operates at peak efficiency, delivering fast, reliable access to data. By systematically analyzing performance, organizations can prevent slowdowns and failures before they impact users.

For example, a company like Pinterest must optimize query performance to handle billions of image searches daily, while Uber relies on real-time performance monitoring for its ride-matching databases. These systems require constant vigilance to maintain the responsiveness users expect.

Core Principles and Implementation

Effective performance management begins with establishing a baseline of normal operation and then continuously monitoring for deviations. This process is crucial for maintaining system health and planning for future growth.

- Establish a Baseline: Document key performance metrics like query response times, throughput, and CPU/memory usage during normal operations. This baseline serves as a benchmark for identifying performance degradation.

- Monitor Key Indicators: Continuously track critical metrics. A sudden spike in query latency or resource utilization can indicate a problem that needs immediate attention. Understanding broader software development key performance indicators can provide valuable context for these database-specific metrics.

- Analyze and Tune: Use tools like query execution plan analyzers to find inefficient queries. Optimizations may involve rewriting SQL, adding indexes, or reconfiguring server parameters.

This iterative cycle of monitoring, analyzing, and tuning is fundamental to sustaining a high-performing database. By implementing automated performance testing within CI/CD pipelines, teams can catch performance regressions before they reach production. Creating shared performance dashboards also provides stakeholders with clear visibility into system health, aligning technical efforts with business objectives and ensuring the database scales effectively with user demand.
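
The baseline-then-deviation idea can be sketched in a few lines. The example below assumes you already collect query latencies in milliseconds. The three-standard-deviation threshold and the function names are illustrative choices, not a standard.

```python
from statistics import mean, stdev

def build_baseline(latencies_ms: list) -> dict:
    """Summarize latencies recorded during normal operation."""
    return {"mean": mean(latencies_ms), "stdev": stdev(latencies_ms)}

def is_regression(baseline: dict, observed_ms: float, sigmas: float = 3.0) -> bool:
    """Flag an observation that sits far above the baseline mean.

    The 3-sigma default is an assumption; tune it to your own tolerance.
    """
    return observed_ms > baseline["mean"] + sigmas * baseline["stdev"]

# Hypothetical samples taken while the system was healthy.
baseline = build_baseline([20.0, 22.0, 19.0, 21.0, 23.0])

normal_check = is_regression(baseline, 24.0)  # within normal variation
spike_check = is_regression(baseline, 60.0)   # well above the baseline

print(normal_check, spike_check)
```

A check like this can run in a CI/CD pipeline against a fixed set of benchmark queries, failing the build when a change pushes latency outside the accepted range.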

6. Database Documentation and Change Management

Database documentation and change management are critical disciplines for maintaining database integrity, stability, and collaboration. This practice involves creating a systematic record of the database schema, stored procedures, and configurations, paired with a formal process for tracking, approving, and implementing changes. This structured approach, advocated by thought leaders like Martin Fowler, ensures that database evolution is deliberate and reversible.

For example, a company like Atlassian uses its own tool, Confluence, to maintain comprehensive, living documentation for its databases. This allows development teams to understand data structures and dependencies, preventing unintended consequences when deploying new application features that interact with the database.

Core Principles and Implementation

Effective documentation and change management prevent knowledge silos and reduce the risk associated with database modifications. A well-defined process provides a clear audit trail and simplifies onboarding for new team members. Key implementation steps include:

- Version Control for Schema: Treat your database schema like application code by using tools like Liquibase or Flyway to version control changes. This enables automated, repeatable deployments and easy rollbacks.

- Documentation Templates: Create and enforce standardized templates for documenting tables, views, and stored procedures. Include descriptions of columns, data types, relationships, and business logic.

- Peer Review Process: Implement a mandatory peer review or approval workflow for all database schema changes. This ensures that modifications are vetted for performance, security, and adherence to standards before they reach production.

This methodical approach is fundamental to agile database techniques, where the database must evolve rapidly alongside the application. Integrating these practices into the development lifecycle ensures that the database remains a reliable and well-understood asset rather than a mysterious black box. This is a core tenet of database management best practices, ensuring long-term system health and scalability. A controlled change process significantly minimizes downtime and data corruption risks.
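
To show what "schema as versioned code" means in practice, here is a deliberately small, hand-rolled migration runner. It is not the Liquibase or Flyway API. It only mirrors the core idea those tools implement: an ordered list of changes plus a table recording which ones have been applied. The schema_version table and the migrations themselves are assumptions for this sketch.

```python
import sqlite3

# Ordered, append-only list of schema changes (hypothetical examples).
MIGRATIONS = [
    (1, "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customers ADD COLUMN email TEXT"),
]

def apply_migrations(conn: sqlite3.Connection) -> list:
    """Apply every migration newer than the recorded version; return what ran."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)"
    )
    current = conn.execute(
        "SELECT COALESCE(MAX(version), 0) FROM schema_version"
    ).fetchone()[0]

    applied = []
    for version, statement in MIGRATIONS:
        if version > current:
            conn.execute(statement)
            conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
            conn.commit()
            applied.append(version)
    return applied

conn = sqlite3.connect(":memory:")
first_run = apply_migrations(conn)   # applies both migrations
second_run = apply_migrations(conn)  # nothing left to do

columns = [row[1] for row in conn.execute("PRAGMA table_info(customers)")]
print(first_run, second_run, columns)
```

Because the runner is idempotent, the same script can be executed in every environment, which is what makes deployments repeatable. Real tools add checksums, rollback scripts, and locking that this sketch leaves out.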

7. Data Quality and Integrity Enforcement

Data quality and integrity enforcement is a critical database management best practice focused on maintaining the accuracy, consistency, and reliability of data. This discipline involves implementing a set of rules and procedures directly within the database to prevent invalid or corrupt data from being stored. By enforcing these standards at the source, organizations can trust their data for decision-making, reporting, and operations.

For example, a banking application enforces integrity by using a CHECK constraint to ensure an account balance never drops below zero. Similarly, a healthcare system uses foreign key constraints to maintain referential integrity, ensuring patient records are correctly linked to their corresponding medical histories and appointments.

Core Principles and Implementation

Effective data integrity relies on leveraging database features to automate and enforce business rules, which is far more reliable than relying solely on application-level logic. The primary tools for this are constraints, triggers, and validation routines.

- Define Constraints at the Database Level: Use NOT NULL, UNIQUE, PRIMARY KEY, FOREIGN KEY, and CHECK constraints to enforce fundamental data rules directly within the table structure.

- Implement Data Validation: For data entering the system via bulk imports or integrations, run validation procedures to identify and cleanse inaccuracies before they contaminate the database.

- Use Triggers for Complex Rules: When business logic is too complex for simple constraints, use database triggers to execute custom validation checks automatically during data modification events (INSERT, UPDATE, DELETE).

- Conduct Regular Data Audits: Schedule and perform routine data quality audits to identify inconsistencies that may have bypassed initial checks. These audits are essential for long-term data health.

Implementing these measures ensures that the database remains a reliable source of truth. Proactive enforcement prevents the costly and time-consuming process of correcting widespread data corruption, making it a cornerstone of robust database management best practices. This approach guarantees that data adheres to business standards regardless of the application or user interacting with it.
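
The banking example above can be sketched with a CHECK constraint and a foreign key in SQLite. The customers and accounts tables and the try_write helper are assumptions for illustration. Note that SQLite only enforces foreign keys after PRAGMA foreign_keys = ON.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # required for FOREIGN KEY enforcement in SQLite

conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE accounts (
    account_id    INTEGER PRIMARY KEY,
    customer_id   INTEGER NOT NULL REFERENCES customers(customer_id),
    balance_cents INTEGER NOT NULL CHECK (balance_cents >= 0)
);
""")
conn.execute("INSERT INTO customers (customer_id, name) VALUES (1, 'Ada')")
conn.commit()

def try_write(sql: str, params: tuple) -> str:
    """Run one write in its own transaction and report whether the database accepted it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"

opened = try_write(
    "INSERT INTO accounts (customer_id, balance_cents) VALUES (?, ?)", (1, 5000)
)
overdrawn = try_write(
    "UPDATE accounts SET balance_cents = balance_cents - ? WHERE account_id = ?", (9000, 1)
)
orphan = try_write(
    "INSERT INTO accounts (customer_id, balance_cents) VALUES (?, ?)", (999, 100)
)

balance = conn.execute(
    "SELECT balance_cents FROM accounts WHERE account_id = 1"
).fetchone()[0]
print(opened, overdrawn, orphan, balance, sep="\n")
```

The overdraft and the orphaned account are both refused by the database itself, so the rule holds even if an application or a manual script forgets to check.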

8. Scalability Planning and Architecture

Scalability planning is a critical database management best practice that involves designing systems to accommodate growth in data volume, user traffic, and transactional load. This forward-thinking approach ensures that as a business expands, its database can grow with it without performance degradation or costly, disruptive overhauls. A scalable architecture is built to handle increased demand efficiently.

For example, Netflix’s distributed database strategy allows it to serve content seamlessly to a global user base by spreading data across multiple geographic regions. Similarly, Airbnb scales its database architecture to manage millions of property listings and bookings worldwide, ensuring a responsive user experience even during peak travel seasons.

Core Principles and Implementation

Effective scalability is achieved through several well-established strategies. The choice between them depends on the specific application needs, budget, and existing infrastructure. The primary approaches include:

- Vertical Scaling (Scaling Up): Involves adding more power (CPU, RAM) to an existing server. This is often simpler to implement but has a physical limit and can become expensive.

- Horizontal Scaling (Scaling Out): Involves adding more servers to a database cluster. This approach, used in distributed systems, offers nearly limitless scalability and improved fault tolerance.

- Partitioning/Sharding: Divides a large database into smaller, more manageable pieces (shards). Each shard can be hosted on a separate server, distributing the load and improving query performance.

Proactive planning is the cornerstone of a scalable system. For most modern applications, a horizontal scaling strategy combined with sharding offers the best long-term flexibility and resilience. This architecture prevents a single server from becoming a bottleneck and supports geographic distribution for lower latency. However, it requires more complex management. The key is to monitor growth patterns continuously and implement caching strategies to offload read requests, reducing the direct load on the database and extending its capacity.
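
At its simplest, sharding comes down to a routing function that maps a key to a server. The sketch below uses a stable hash and a modulo. The shard names and the shard_for helper are hypothetical, and real deployments often prefer consistent hashing or a lookup directory so that adding a shard does not remap most keys.

```python
import hashlib

# Hypothetical shard identifiers; in practice these would be connection targets.
SHARDS = ["db-shard-0", "db-shard-1", "db-shard-2", "db-shard-3"]

def shard_for(key: str) -> str:
    """Route a key to a shard using a stable hash.

    SHA-256 is used instead of Python's built-in hash(), which is randomized
    per process for strings and would route the same key differently on
    different servers.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(SHARDS)
    return SHARDS[index]

# The same key always lands on the same shard.
first = shard_for("customer-42")
second = shard_for("customer-42")

# Many keys spread across the available shards.
used = {shard_for(f"customer-{n}") for n in range(1000)}

print(first == second, sorted(used))
```

The trade-off mentioned above shows up quickly: queries that span many customers now touch several servers, which is part of the added management complexity.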

9. Disaster Recovery and High Availability

Disaster Recovery (DR) and High Availability (HA) are critical database management best practices that ensure continuous operation and data protection. High Availability focuses on preventing downtime through redundancy, using technologies like clustering and automated failover, while Disaster Recovery provides a plan to restore operations after a catastrophic event, such as a natural disaster or major hardware failure.

For example, global financial institutions use real-time, geographically distributed database replication. This ensures that if one data center goes offline, another can take over instantly, maintaining service continuity and meeting strict regulatory compliance requirements for data availability.

Core Principles and Implementation

Implementing a robust DR/HA strategy requires a clear understanding of business needs, defined by two key metrics: Recovery Time Objective (RTO) and Recovery Point Objective (RPO). RTO dictates the maximum acceptable downtime, while RPO defines the maximum tolerable data loss.

- Define Clear Objectives: Establish RTO and RPO values based on business impact analysis. A critical e-commerce site may need an RTO of minutes, while an internal reporting system might tolerate hours.

- Implement Automated Failover: Use technologies like clustering or database mirroring to automatically switch to a standby server if the primary one fails, minimizing manual intervention and downtime.

- Regularly Test Procedures: Routinely conduct failover tests and full disaster recovery drills to validate your plan, identify weaknesses, and ensure your team is prepared to execute it.

- Use Geographic Distribution: Host replicas or backups in different geographic regions to protect against localized disasters like fires, floods, or power outages.
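
RTO and RPO become useful once you compare them with what your current setup actually delivers. The sketch below does that arithmetic under two simplifying assumptions: worst-case data loss equals the interval between backups, and recovery time is whatever your last restore drill measured. The class, function, and figures are illustrative.

```python
from dataclasses import dataclass
from datetime import timedelta

@dataclass
class RecoveryObjectives:
    rto: timedelta  # maximum acceptable downtime
    rpo: timedelta  # maximum tolerable data loss, expressed as time

def evaluate_plan(
    objectives: RecoveryObjectives,
    backup_interval: timedelta,
    measured_restore_time: timedelta,
) -> dict:
    """Compare objectives with current practice.

    Assumption: a failure just before the next backup loses one full
    interval of data, so the interval is the worst-case data loss.
    """
    return {
        "meets_rpo": backup_interval <= objectives.rpo,
        "meets_rto": measured_restore_time <= objectives.rto,
    }

# Hypothetical storefront: 15 minutes of downtime and 5 minutes of data loss tolerated.
storefront = RecoveryObjectives(rto=timedelta(minutes=15), rpo=timedelta(minutes=5))

report = evaluate_plan(
    storefront,
    backup_interval=timedelta(hours=1),          # hourly backups
    measured_restore_time=timedelta(minutes=10),  # result of the last restore drill
)
print(report)
```

In this example the restore is fast enough, but hourly backups cannot meet a five-minute RPO, which points toward continuous replication or more frequent log backups.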

A well-architected HA/DR plan is fundamental to maintaining business continuity and customer trust. These strategies are not just for large enterprises; with cloud services, even small businesses can implement sophisticated solutions. Ensuring your data layer is resilient is as crucial as the application itself, a principle that aligns with the best practices for web development. You can learn more about holistic system design on upnorthmedia.co to see how data resilience fits into the broader picture.

Best Practices Comparison Matrix for Database Management

| Item | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Database Normalization | Moderate to High – requires design expertise and careful planning | Moderate – database design tools recommended | Reduced redundancy, improved data integrity, easier maintenance | Structured data systems like e-commerce, banking, healthcare | Maintains consistency, reduces storage, prevents anomalies |
| Regular Database Backups | Low to Moderate – setup automated schedules and retention policies | High – needs storage and infrastructure | Reliable data recovery, minimal downtime, regulatory compliance | Any system requiring data protection and quick recovery | Protects data, supports compliance, enables auditing |
| Database Indexing Strategy | Moderate – requires analysis of queries and ongoing maintenance | Moderate – increased storage and maintenance overhead | Greatly improved query performance and faster data retrieval | High-read environments like search engines, trading platforms | Dramatically speeds up reads, supports complex queries |
| Database Security Implementation | High – involves multiple security layers and continuous updates | Moderate to High – encryption and monitoring add overhead | Prevents unauthorized access and data breaches, ensures compliance | Sensitive data environments like healthcare, finance, e-commerce | Protects data, ensures compliance, builds trust |
| Performance Monitoring and Optimization | Moderate to High – needs specialized expertise and infrastructure | Moderate – monitoring tools may consume resources | Early detection of bottlenecks, optimized performance | Large-scale applications with critical uptime needs | Proactive optimization, reduces downtime, data-driven decisions |
| Database Documentation and Change Management | Moderate – requires process discipline and ongoing updates | Low to Moderate – tools for versioning and diagrams | Improved collaboration, error reduction, faster troubleshooting | Teams with evolving schemas and collaborative development | Facilitates knowledge transfer, controls changes, supports audits |
| Data Quality and Integrity Enforcement | Moderate – defining constraints and validations | Low to Moderate – slight impact on performance | Higher data accuracy, automatic enforcement of business rules | Systems requiring strict data correctness like banking, healthcare | Ensures accuracy, prevents invalid data, enforces rules |
| Scalability Planning and Architecture | High – involves complex design and architecture changes | High – infrastructure and management intensive | Handles growth in data and users, maintains performance | Large-scale, globally distributed systems | Supports growth, improves response times, flexible architecture |
| Disaster Recovery and High Availability | High – complex infrastructure and continuous testing required | High – investment in replication and failover systems | Minimized downtime, fast recovery, business continuity | Mission-critical systems with zero tolerance for downtime | Reduces disruption, protects data, ensures continuous ops |

Build a Resilient Data Foundation for the Future

Navigating the complexities of data is no longer a task reserved for enterprise-level IT departments. For any business with a digital presence, from Omaha-based retailers to national content platforms, the database is the central nervous system of your operations. The strategies detailed in this guide are not merely technical items on a checklist; they are the fundamental building blocks of a resilient, high-performance digital infrastructure.

Adopting these database management best practices is an ongoing commitment, not a one-time project. It's about cultivating a proactive mindset where data integrity, security, and performance are integral to your daily operations. Each practice we've explored, from meticulous normalization and strategic indexing to robust security protocols and comprehensive disaster recovery plans, contributes to a greater whole. Together, they create a data foundation that is not only stable and reliable but also agile and ready to adapt to future challenges and opportunities.

Key Takeaways for Immediate Action

To transform these concepts into tangible results, focus on these critical pillars:

- Security First, Always: Implement the principle of least privilege, encrypt sensitive data, and conduct regular security audits. A data breach can erode customer trust and incur significant financial penalties, making proactive security a non-negotiable priority.

- Performance is a Feature: Your users experience your database through application speed and responsiveness. Consistent performance monitoring, query optimization, and a smart indexing strategy directly impact user satisfaction, conversion rates, and search engine rankings.

- Prepare for the Unexpected: Regular, verified backups and a well-documented disaster recovery plan are your ultimate safety net. Don't wait for a crisis to discover a flaw in your recovery process. Test your backups and recovery procedures regularly to ensure business continuity.

- Plan for Growth: Scalability isn't an afterthought. Whether you're choosing a database architecture or planning your indexing strategy, always consider how your decisions will support future growth in data volume and user traffic.

The True Value of a Well-Managed Database

Ultimately, mastering these database management best practices is about more than just preventing errors or speeding up queries. It's about unlocking the full potential of your most valuable asset: your data. A well-managed database empowers you to deliver exceptional user experiences, make informed business decisions with reliable data, and innovate with confidence. It transforms your data layer from a potential liability into a powerful competitive advantage that fuels growth, enhances operational efficiency, and builds a rock-solid foundation for whatever comes next.

Ready to transform your database from a simple storage unit into a strategic business asset? The experts at Up North Media specialize in building and optimizing high-performance database architectures for web applications. We can help you implement these best practices to ensure your digital infrastructure is secure, scalable, and lightning-fast. Contact us today for a free consultation and let's build a resilient data foundation for your future.