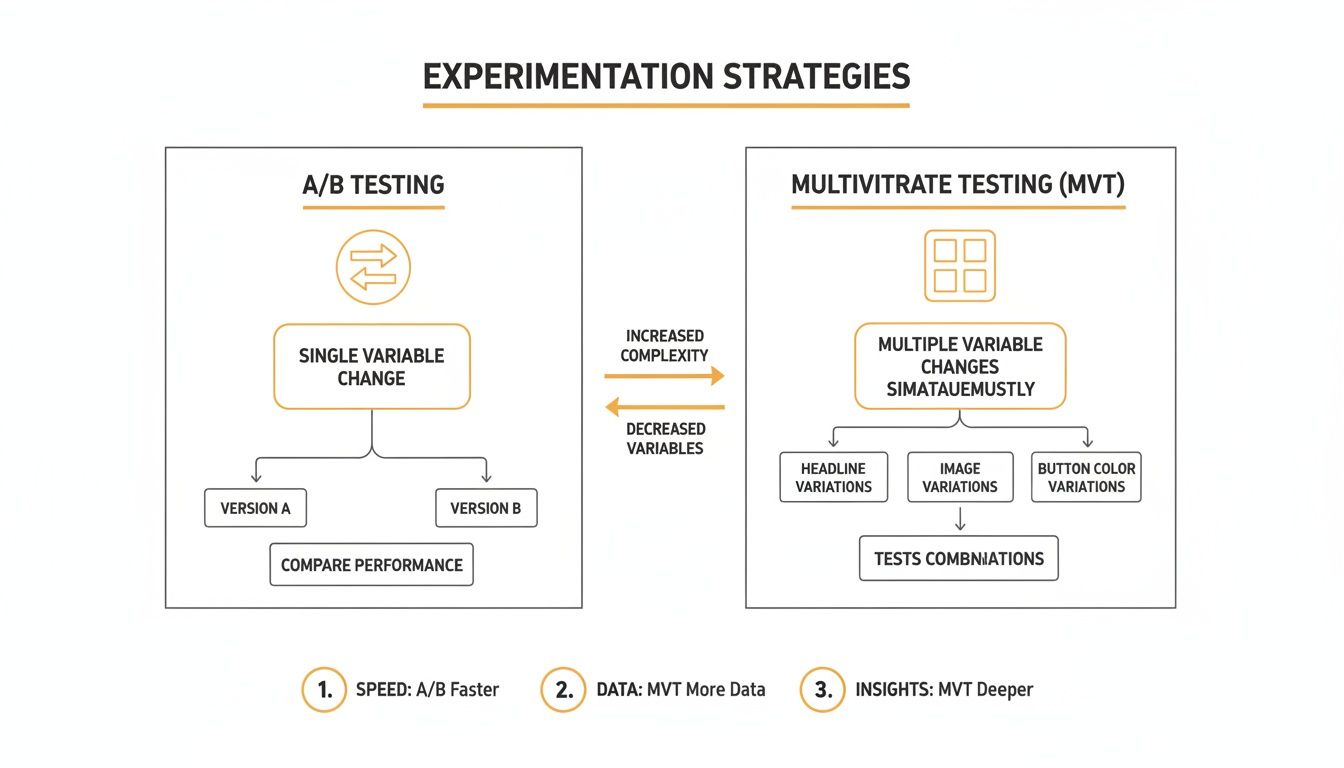

So you've probably heard of A/B testing. You know, testing a red button versus a green one to see which gets more clicks. It’s a solid strategy, but what if you could do more? What if you could find the absolute perfect combination of elements on a page—the headline, the image, and the button color—all at once?

That's where multivariate testing (MVT) comes in. It’s a method for testing multiple variations of several page elements at the same time to discover the single best-performing combination. It's less about "this or that" and more about finding the perfect mix.

Understanding the Perfect Recipe for Your Web Page

Think of your landing page like a recipe for chocolate chip cookies. An A/B test is like trying just one ingredient swap—say, brown sugar versus white sugar. It's useful, sure. You might find that brown sugar makes a better cookie.

But that approach doesn't tell you how brown sugar works with other ingredients, like dark chocolate chips versus milk chocolate chips. You're left guessing if the winning sugar would be even better with a different kind of chocolate.

Multivariate testing, on the other hand, is like being a master baker and testing every possible combination in one go:

- Batch 1: White Sugar + Milk Chocolate

- Batch 2: White Sugar + Dark Chocolate

- Batch 3: Brown Sugar + Milk Chocolate

- Batch 4: Brown Sugar + Dark Chocolate

This way, you don't just find the best sugar. You find the ultimate combination of ingredients that creates the tastiest cookie. MVT does the same for your webpage, revealing the perfect mix of headlines, images, and calls-to-action that drives the most conversions.

Before we go deeper, let's get a handle on the key terms you'll run into. This quick table breaks down the essentials.

Key Terms in Multivariate Testing at a Glance

| Term | Simple Definition | Example |
|---|---|---|
| Element | A part of the page you want to test. | A headline, an image, or a call-to-action button. |
| Variation | A different version of an element. | For a headline element, variations could be "Get Started Now" vs. "Sign Up Free." |
| Combination | A unique version of the page created by mixing one variation of each element. | Headline A + Image B + Button C. |
| Interaction Effect | When the impact of one element's variation changes because of another element's variation. | A red button might convert best with Image A, but a green button converts best with Image B. |

Getting these terms down makes it much easier to see how all the pieces of a multivariate test fit together.

The Power of Interaction Effects

The real magic of multivariate testing is its ability to uncover interaction effects. That's a fancy term for when the impact of one element changes based on what else is on the page. A bold headline might do okay on its own, but it could be a conversion machine when paired with a specific hero image.

The goal of MVT isn't just to find the best version of a single element. It’s about finding the best-performing combination of elements. It moves beyond a simple "this vs. that" mindset to reveal the synergy that really drives results.
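To make the idea concrete, here's a minimal sketch of how an interaction effect shows up in the numbers. The conversion rates and variation names below are made up purely for illustration; the point is only the arithmetic: measure the headline's lift under each image, then compare the two lifts.

```python
# Hypothetical conversion rates for a 2x2 test (illustrative numbers only).
rates = {
    ("plain headline", "image A"): 0.040,
    ("plain headline", "image B"): 0.042,
    ("bold headline", "image A"): 0.043,
    ("bold headline", "image B"): 0.060,
}


def headline_lift(image):
    """Lift from switching plain -> bold headline, holding the image fixed."""
    return rates[("bold headline", image)] - rates[("plain headline", image)]


lift_with_a = headline_lift("image A")
lift_with_b = headline_lift("image B")

# If the headline's lift were the same under both images, this would be ~0.
interaction = lift_with_b - lift_with_a

print(f"Headline lift with image A: {lift_with_a:.3f}")
print(f"Headline lift with image B: {lift_with_b:.3f}")
print(f"Interaction (difference of lifts): {interaction:.3f}")
```

In this made-up data the bold headline barely helps next to image A but helps a lot next to image B. That gap between the two lifts is the interaction, and two separate A/B tests would have a hard time surfacing it.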

The image below gives you a great visual of this. It shows how different elements—like a headline, sub-headline, and body copy—are all mixed and matched to create several unique experiences that are tested all at once.

This process shows you exactly how much each variable contributes to the page's success, giving you some incredibly deep, actionable insights.

A Concept with Deep Roots

While it feels like a modern marketing trick, the ideas behind MVT are far older than the web. Controlled comparisons of competing treatments date back to at least the 1700s, and the factorial designs that MVT relies on were formalized by the statistician Ronald Fisher in the 1920s and 1930s.

One of the coolest early examples comes from the British Royal Navy. In 1747, ship's surgeon James Lind was desperately trying to figure out how to stop sailors from getting scurvy on long voyages. He split twelve sick sailors into pairs, gave each pair a different remedy, and found that the pair eating citrus fruit recovered far faster than the rest. Once citrus became standard issue decades later, the disease was practically wiped out at sea.

At its heart, that’s what MVT is all about: finding the winning formula. For businesses today, it’s a powerful way to optimize the user experience, boost conversions, and make strategic decisions backed by real data.

Choosing Between Multivariate and A/B Testing

Figuring out whether to run a multivariate or an A/B test can feel tricky, but it gets a lot simpler when you nail down your goal first. Think of it like a competition. A/B testing is a straight-up duel, like a boxing match. It pits one version of your page (the champ) against a totally different challenger to see which one comes out on top.

Multivariate testing (MVT), on the other hand, is more like a team chemistry experiment. Instead of one big fight, you’re the coach trying to figure out the absolute best lineup. You test how different players—your headline, your call-to-action (CTA) button, and that hero image—all work together to create the highest-scoring team. The whole point is to find the most powerful combination of elements.

When to Use A/B Testing

A/B testing is your go-to when you're making big, bold changes. It really shines when your hypothesis is about a single, high-impact element or you're rolling out a complete redesign.

You'll want to choose A/B testing when you need to:

- Test a Radical Redesign: If you're comparing a completely new landing page layout against the one you have now, an A/B test gives you a clear winner. No ambiguity.

- Make One Significant Change: Do you want to know if a video background converts better than a static image? That’s a simple "this vs. that" question, and it's perfect for an A/B test.

- Work with Lower Traffic: Since an A/B test only has to split your traffic between two versions, it can reach statistical significance way faster. This makes it the ideal choice for pages that don't get a flood of visitors.

A/B testing is basically a sprint. It’s built to get you a quick, definitive answer on a big change.

When to Use Multivariate Testing

Multivariate testing is where you turn when you want to refine a page that’s already doing pretty well. It helps you understand how multiple smaller elements interact with each other. It's an evolutionary approach, perfect for optimizing high-traffic pages where even subtle tweaks can lead to some serious gains.

Choose MVT when you want to:

- Optimize Multiple Elements: You have a decent landing page but have a hunch that the headline, CTA button color, and testimonial placement could all be working harder. MVT will show you the winning combination.

- Understand Interaction Effects: Do you want to know if that witty headline you wrote performs better with a professional headshot or a casual illustration? MVT is built to uncover exactly these relationships between elements, which a single A/B test can't show you.

- Refine High-Traffic Pages: MVT needs a lot of traffic because it has to split visitors among many different combinations. It's a perfect fit for homepages, pricing pages, or key landing pages that already pull in thousands of visitors.

The core difference is scope. A/B testing finds a winning page. Multivariate testing finds a winning combination of elements. This distinction is central to building effective conversion rate optimization strategies.

A Practical Decision Framework

Still on the fence? Let's break it down with a simple comparison to help guide your choice.

| Factor | Use A/B Testing If... | Use Multivariate Testing If... |
|---|---|---|
| Your Goal | You need to validate a single, bold idea or a complete redesign. | You want to fine-tune an existing page by optimizing multiple elements at once. |
| Page Traffic | You have low to moderate traffic and need results relatively quickly. | You have high traffic and can afford to split it among many variations. |
| Complexity | Your hypothesis is simple: "Does version A beat version B?" | Your hypothesis is complex: "Which combination of headline, image, and CTA performs best?" |
| Insights Needed | You need to know which page won. | You need to know why a combination won by seeing how elements influence each other. |

At the end of the day, both are powerful tools in your optimization toolkit. Understanding what multivariate testing is and how it differs from A/B testing lets you pick the right approach for your specific goals, traffic levels, and the questions you need answered. To go even deeper, you can explore various conversion rate optimization strategies that bring both of these methods together for maximum impact.

Understanding the Statistics That Power MVT

Diving into multivariate testing can feel like you’re about to get hit with a firehose of statistics, but trust me, the core ideas are simpler than they sound. You don’t need a Ph.D. to get what makes MVT tick. The whole thing is built on a few key concepts that ensure your results are actually trustworthy, not just random noise.

At its heart, MVT runs on a method called factorial design. The easiest way to think about this is like building a menu. Let’s say you’re testing two different headlines (Headline A, Headline B) and three different call-to-action buttons (Button 1, Button 2, Button 3). A factorial design maps out every possible combination, just like a restaurant menu lists every combo meal.

- Headline A + Button 1

- Headline A + Button 2

- Headline A + Button 3

- Headline B + Button 1

- Headline B + Button 2

- Headline B + Button 3

You end up with six unique versions of your page to test against each other. Factorial design is what lets MVT systematically test all these pairings at once, making sure you don't miss the single most powerful combination. This is a world away from the simple head-to-head matchup of A/B testing.
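If you'd rather see that menu generated than written out by hand, here's a short sketch using Python's standard `itertools.product`. The variation names simply mirror the example above; nothing here is tied to any particular testing tool.

```python
from itertools import product

headlines = ["Headline A", "Headline B"]
buttons = ["Button 1", "Button 2", "Button 3"]

# Full factorial design: every headline paired with every button.
combinations = list(product(headlines, buttons))

for number, (headline, button) in enumerate(combinations, start=1):
    print(f"Combination {number}: {headline} + {button}")

print(f"Total combinations: {len(combinations)}")
```

Adding a third element is just one more list passed to `product`, which is also why the number of combinations climbs so quickly.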

Put simply, A/B testing is a straight duel between two versions. MVT, on the other hand, is more like a tournament, exploring how multiple elements play together to create a winning experience.

The Rules of the Game: Statistical Significance and Confidence

Okay, so you’ve got all your combinations running. How do you know if a "winner" is the real deal or just got lucky? That’s where statistical significance comes in. It’s basically a confidence score that tells you the results of your test aren't a fluke.

Most pros in the optimization world won't make a call without a 95% confidence level. What that means is that if there were truly no difference between the versions, a result this strong would show up by chance only about 5 times in 100. It’s the industry's standard bar for being able to say, "Yes, this change actually works."

Think of statistical significance as the referee in your experiment. It’s the official call that confirms a performance lift is real and repeatable, giving you the green light to roll out the winning combination across your site.

If you end a test with only 70% confidence, there's roughly a 3-in-10 chance you'd see a gap that big even if the versions performed identically. That’s not a strategy; it’s a gamble. This is why patience is so important: you absolutely have to let a test run long enough to collect enough data.
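For the curious, here's roughly what a significance check looks like under the hood. This is a minimal sketch of a two-proportion z-test, one common way to compare two conversion rates; your testing platform may use a different method, and the visitor and conversion counts are hypothetical.

```python
from math import erf, sqrt


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF computed from the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - cdf)


# Hypothetical counts: the control versus one challenger combination.
p_value = two_proportion_p_value(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"p-value: {p_value:.4f}")
print("Significant at the 95% level" if p_value < 0.05 else "Not significant yet")
```

A p-value below 0.05 corresponds to clearing the 95% bar. Keep in mind that an MVT compares many combinations at once, and every extra comparison is another chance for a fluke, so a stricter threshold (for example, dividing 0.05 by the number of comparisons) is a reasonable safeguard.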

Why MVT Is So Traffic Hungry

This brings us to the biggest hurdle in multivariate testing: it needs a ton of traffic. Since you’re splitting visitors among many different combinations, each version only gets a tiny slice of the audience.

To hit that critical 95% confidence level, every single combination needs to see enough visitors and conversions. This is what's known as achieving an adequate sample size. Without enough traffic, a test could drag on for months—or even years—to produce a reliable result. By then, your findings might be completely irrelevant.

And the traffic requirements are no joke. As a rough guideline, you often need at least 10,000 monthly visitors per variation for a test to have enough statistical power. Consider this: a site with 30,000 daily visitors and a 5% conversion rate could run a simple test with three variations and hit 95% confidence in about 11 days. But drop that to 5,000 daily visitors with a 2% conversion rate, and the same test could take a staggering 468 days. You can dig into more of these testing dynamics on Matomo.org.

Understanding this trade-off is crucial. While MVT offers incredibly deep insights, its appetite for traffic means it’s really only practical for high-volume pages. For most other situations, the focused approach of A/B testing is a much smarter bet.

How to Apply Multivariate Testing in the Real World

Theory is one thing, but seeing how multivariate testing actually drives business growth is where it really gets interesting. Once you move past the concepts, you can see how real companies use MVT to fine-tune their user experiences and get tangible results. This is where the magic of finding that perfect combination of elements turns into more revenue and better engagement.

Let's walk through a few concrete scenarios where MVT becomes an essential tool for optimization. These examples show how businesses in different industries pinpoint challenges and test specific combinations to find a winning formula.

Optimizing an E-commerce Product Page

For an online store, the product page is where browsers become buyers. Even tiny bits of friction in the user journey can lead to abandoned carts. Imagine an e-commerce shop wants to increase its "Add to Cart" rate—a critical first step in the sales funnel.

Instead of running separate A/B tests for every single idea, they decide to launch a multivariate test.

- Element 1 (Product Images): They test a professional studio photo against a lifestyle image showing the product in use.

- Element 2 (Pricing Display): They compare showing the full price ("$99") versus a "buy now, pay later" option ("4 payments of $24.75").

- Element 3 (Shipping Message): One variation is "Free Shipping on Orders Over $50," while the other is "Get it by Friday."

By testing all eight possible combinations, the retailer might discover that the lifestyle photo paired with the installment pricing and the "Get it by Friday" message boosts add-to-cart rates by 18%. This insight reveals that urgency and affordability, when combined with a relatable image, create the most powerful psychological trigger for their audience.

Refining a SaaS Pricing Page

Now, let's think about a SaaS company trying to get more sign-ups for its mid-tier plan. Their pricing page gets plenty of traffic, but conversion rates are flat. The team has a hunch that a mix of unclear feature descriptions and a weak call-to-action is the culprit.

They set up a multivariate test to find the best possible layout.

- Element 1 (Feature Descriptions): They test bullet points with technical specs against short, benefit-focused paragraphs.

- Element 2 (CTA Button Text): The variations are "Start Your Free Trial" versus "Choose Plan."

- Element 3 (Plan Layout): They compare a standard vertical layout against a horizontal one that highlights the recommended plan.

After running the test, the data shows that the benefit-focused paragraphs, when combined with the "Start Your Free Trial" CTA and the highlighted plan layout, increase sign-ups by 23%. MVT didn't just find the best button; it uncovered the ideal context that made users feel confident enough to commit. When you are looking to improve your conversion rates, it's essential to follow our detailed guide on landing page design best practices.

The true power of MVT is its ability to reveal synergies. Major brands have seen incredible results, with stats showing MVT uncovers a 22% average conversion uplift versus A/B testing's 12%. Expedia famously tested 32 combinations, finding that removing one unused field simplified their user flow and spiked revenue by a massive 32.7%. Discover more about these game-changing optimization findings on VWO.com.

MVT also plays a huge role in creating a high-converting lead magnet funnel, letting marketers systematically test and refine each step of the customer journey for the best performance. These real-world applications show that understanding multivariate testing is the first step toward unlocking deeper, more impactful optimization insights that drive genuine growth.

A Step-by-Step Guide to Your First MVT Campaign

Diving into your first multivariate test can feel like a lot, but if you break it down into simple, logical steps, it's actually pretty manageable. Think of this as your roadmap, guiding you from a simple question about your users to a data-backed answer that can genuinely move the needle. A good process keeps you focused, makes sure your test is solid, and gives you results you can trust.

Each step here builds on the one before it, creating a strong foundation for a successful campaign. From nailing down a smart hypothesis to making sense of the final numbers, this process will show you how different elements on your page actually work together to get people to convert.

Step 1: Formulate a Strong Hypothesis

Every great test starts with a question, not a tool. Your hypothesis is just an educated guess about what change will lead to a specific result, and—most importantly—why. A vague hypothesis like "let's test some buttons" is a recipe for useless results. You have to get specific.

A strong hypothesis is structured and rooted in some kind of data or observation. For instance: "We believe changing the headline to focus on benefits, swapping the product shot for a lifestyle image, and using an urgent CTA like 'Get It Now' will increase add-to-cart rates. We think this because user feedback suggests customers are motivated by seeing the product in a real-world context and respond to time-sensitive offers."

That kind of hypothesis has real power because it’s:

- Testable: You can easily create variations to prove or disprove it.

- Specific: It calls out the exact elements and the metric you’re trying to improve.

- Insightful: It’s built on an assumption about user behavior. Even if you're wrong, you learn something valuable.

Step 2: Select Elements and Create Variations

Once your hypothesis is locked in, it's time to pick which parts of the page you'll actually test. You'll want to focus on high-impact areas that are directly tied to your goal. On a product page, that might be the headline, the main image, and the call-to-action button. For a lead form, maybe it’s the form's length, its title, and the privacy policy text.

After you've picked your elements, you need to create meaningful variations for each one. These shouldn't be random. Each variation should represent a different angle on the user problem you identified in your hypothesis. For example, if your element is the headline, your variations could be:

- Variation A (Benefit-Driven): "Unlock Smoother Skin in 30 Days"

- Variation B (Social Proof): "Join 50,000+ Happy Customers"

Just remember, the total number of combinations is the number of variations for each element multiplied together. So, 2 headlines x 2 images x 3 buttons = 12 total combinations. You'll need to keep this number realistic based on your website's traffic.
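Here's that multiplication as a quick sketch, along with what it does to your traffic. The element counts match the example above; the daily visitor figure is a hypothetical number chosen only to show the dilution.

```python
from math import prod

# Variations per element, matching the example above.
variations_per_element = {"headline": 2, "image": 2, "button": 3}
total_combinations = prod(variations_per_element.values())

# Hypothetical traffic figure, used only to show how the split dilutes each cell.
daily_visitors = 6000
visitors_per_combination = daily_visitors / total_combinations

print(f"Total combinations: {total_combinations}")
print(f"Daily visitors per combination: {visitors_per_combination:.0f}")
```

Bump any one element from two variations to three and the total jumps again, so it pays to run this arithmetic before you fall in love with a test plan.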

Step 3: Calculate Sample Size and Set Up Your Test

This is where we get real about the stats. Before you hit "launch," you have to figure out if you even have enough traffic to get a reliable result in a decent amount of time. MVT is notoriously "traffic-hungry" because it has to split your audience across so many different combinations.

Use a sample size calculator (most testing platforms have one built-in) to estimate how many visitors you'll need for each combination to reach statistical significance. You should be aiming for 95% confidence. This calculation will set realistic expectations for how long the test needs to run. If the calculator says it'll take six months, you need to simplify your test by cutting down the number of variations.
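If you want a feel for what those calculators are doing, here's a rough sketch based on the standard normal-approximation formula for comparing two proportions. Treat it as a ballpark, not a replacement for your platform's calculator: the baseline rate, the lift you hope to detect, the 80% power setting, and the traffic figure are all assumptions picked for illustration.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_combination(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate visitors needed per combination to detect a given lift
    with a two-sided two-proportion test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_finish(n_per_combination, combinations, daily_visitors):
    """Days needed if traffic is split evenly across all combinations."""
    return ceil(n_per_combination * combinations / daily_visitors)


# Assumed inputs: 3% baseline conversion rate, hoping to detect a 20% relative lift.
n = visitors_per_combination(baseline_rate=0.03, relative_lift=0.20)
print(f"Visitors needed per combination: {n}")
for combos in (2, 4, 12):
    days = days_to_finish(n, combos, daily_visitors=5000)
    print(f"{combos} combinations: about {days} days")
```

Two things jump out when you play with the inputs: smaller lifts need dramatically more visitors, and the test duration scales directly with the number of combinations. Both are good reasons to trim variations before launch.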

Don't skip this step. Seriously. Launching a multivariate test on a low-traffic page is the single biggest mistake people make. You end up with tests that never finish, giving you zero useful information and wasting a ton of time.

Step 4: Monitor and Analyze the Results

Once your test is live, keep an eye on it for the first day or two. You're just making sure there are no technical glitches, like a broken variation that isn't loading correctly. After that, you have to be patient and let the data roll in. Fight the urge to peek at the results every day and call a winner too early.

When the test finally hits statistical significance, it’s time to dig in. Your testing tool will show you two main things: the winning combination and the impact of each individual element. But don't just stop at the winner. Look for the interaction effects. Did a certain headline only do well when it was paired with a specific image? This is where the real gold is. These deeper insights can inform your entire strategy for conversion rate optimization. Understanding these little nuances is how you turn a simple test into a long-term win.
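As a sketch of what that analysis can look like, the snippet below takes a small table of made-up results, picks the best combination, and then averages conversion rates by variation to estimate each element's overall impact. The data and names are hypothetical, and real tools typically add confidence intervals on top of this.

```python
from collections import defaultdict

# Hypothetical results: (headline, image) -> (visitors, conversions).
results = {
    ("benefit", "studio"): (10000, 400),
    ("benefit", "lifestyle"): (10000, 520),
    ("social proof", "studio"): (10000, 410),
    ("social proof", "lifestyle"): (10000, 430),
}
elements = ("headline", "image")

rates = {combo: conv / visitors for combo, (visitors, conv) in results.items()}
best_combo = max(rates, key=rates.get)

# Main effect: average conversion rate of all combinations sharing a variation.
by_variation = defaultdict(list)
for combo, rate in rates.items():
    for element, variation in zip(elements, combo):
        by_variation[(element, variation)].append(rate)
main_effects = {key: sum(vals) / len(vals) for key, vals in by_variation.items()}

print("Best combination:", best_combo, f"{rates[best_combo]:.2%}")
for (element, variation), avg in sorted(main_effects.items()):
    print(f"{element} = {variation}: average rate {avg:.2%}")
```

In this invented data set the lifestyle image wins on average, but nearly all of its advantage comes from the pairing with the benefit headline. Looking only at the averages would hide that, which is why it's worth reading the per-combination rates too.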

Common MVT Mistakes and How to Avoid Them

Understanding multivariate testing is one thing; pulling off a test without a hitch is another. Even pros can fall into common traps that waste time, burn through traffic, and spit out results that are just plain wrong. Getting ahead of these pitfalls is the difference between reliable insights and a failed campaign.

The biggest mistake we see? Testing too many variations on a low-traffic page. It’s an easy trap to fall into. You’ve got a bunch of great ideas for a headline, a new hero image, and a killer call-to-action, and you want to see how they all work together. The problem is, MVT needs a massive audience to work its magic.

Every new combination you add slices your traffic into smaller and smaller pieces. Without enough visitors flowing to each version, your test will take months—or even years—to reach statistical significance. By then, the results are probably obsolete anyway.

Overlooking Test Duration and Timing

Another classic blunder is calling the test the moment one variation pulls ahead. Early results are often just statistical noise, not a true reflection of user behavior. If you declare a winner too soon, you might implement a change that does nothing—or worse, actually hurts your conversions in the long run.

Always let your test run until it hits the sample size you calculated beforehand and reaches at least 95% statistical significance. This isn't just a suggestion; it's the only way to feel confident that your results are real and not just a fluke.
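To see why stopping early is risky, here's a small simulation sketch. Both arms share the same true conversion rate, so any "winner" is a false positive by construction. It compares calling the test at the first significant peek against checking only once at the planned end. The run count, batch size, conversion rate, and seed are arbitrary assumptions.

```python
import random
from math import sqrt


def z_score(conv_a, conv_b, n):
    """z statistic for two equal-sized arms using a pooled conversion rate."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0, 1):
        return 0.0
    return (conv_b / n - conv_a / n) / sqrt(pooled * (1 - pooled) * 2 / n)


def simulate(runs=300, peeks=10, batch=100, rate=0.10, seed=42):
    """A/A simulation: identical arms, so every 'significant' result is a false positive."""
    rng = random.Random(seed)
    stopped_early = significant_at_end = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        flagged = False
        for peek in range(1, peeks + 1):
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            significant = abs(z_score(conv_a, conv_b, peek * batch)) > 1.96
            flagged = flagged or significant
        stopped_early += flagged            # any peek looked significant
        significant_at_end += significant   # only the final look counts
    return stopped_early / runs, significant_at_end / runs


peeking_rate, final_rate = simulate()
print(f"False positives when stopping at the first significant peek: {peeking_rate:.1%}")
print(f"False positives when checking only at the planned end: {final_rate:.1%}")
```

The peeking rate can never be lower than the end-only rate, because the final look is itself one of the peeks. In practice it's usually noticeably higher, and that gap is the price of calling a winner early.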

Context is also huge. Running a test over a major holiday or during a massive sales event will absolutely skew your data. Think about it: user behavior during Black Friday is a world away from a typical Tuesday in April.

To avoid this, make sure your test runs for at least one full business cycle, which for most companies is one to two weeks. This helps smooth out the daily ups and downs and gives you a much more accurate picture of how people will react under normal circumstances.

Ignoring the Deeper Insights

Finally, one of the most common missed opportunities is focusing only on the single winning combination and immediately moving on. Sure, finding the top performer is the main goal, but the real gold in multivariate testing is understanding the interaction effects. The data tells a story about why certain combinations clicked while others flopped.

Don't just declare a winner and call it a day. Dig into the results to learn:

- Which element had the biggest impact? Was it really the headline that moved the needle, or was the new CTA doing all the heavy lifting?

- Were there surprising interactions? Did that bold new headline only work when it was paired with a specific image?

- What can you learn from the losers? The combinations that bombed are just as valuable. They tell you exactly what your audience doesn't respond to.

When you analyze these subtle interactions, you get powerful insights into what your audience actually wants. It transforms a one-off test into a serious learning opportunity that can shape your entire optimization strategy from here on out.

Your MVT Questions, Answered

Even after getting the hang of what multivariate testing is, a few practical questions always pop up. It’s one thing to understand the theory, but another to feel confident putting it into practice. Let's clear up some of the common hurdles.

How Many Variations Can I Realistically Test?

The honest answer? It all comes down to your website traffic.

While it’s tempting to test everything at once, every new combination you add demands a bigger audience to get a reliable result. A good, practical starting point for most sites is testing two headline variations against two button variations. That creates four total combinations—a manageable number.

Most optimization platforms will tell you to stay under 12-16 combinations unless you have massive, enterprise-level traffic. This keeps your test from running forever and gives you actionable results before your audience or the market changes.

What Are the Best Tools for Multivariate Testing?

There are some great platforms out there with solid MVT features, and the best one for you really depends on your budget, team skills, and what you need it to connect with.

- For Large Enterprises: If you have the budget and need all the bells and whistles, tools like Optimizely and Adobe Target are the industry heavyweights. They're built for complex, large-scale testing.

- For SMBs: If you need powerful features without the enterprise price tag, platforms like VWO (Visual Website Optimizer) and Convert Experiences are fantastic options. They offer robust MVT functionality that’s much more accessible.

Should I Run a Multivariate Test on a Low-Traffic Page?

We strongly recommend against it. MVT works by splitting your traffic into many different little slices, so it needs a ton of visitors to produce a trustworthy result in a reasonable amount of time. On a low-traffic page, a test could drag on for months—or even years.

For pages with limited traffic, A/B testing is a much better fit. It focuses your entire audience on just two versions, so you’ll reach a statistically significant answer way faster. You could also gather qualitative feedback from user surveys or heatmaps to guide your changes.
