A well-designed database is the unsung hero of any successful web application, silently ensuring speed, reliability, and scalability. It's the architectural blueprint that determines whether your app can handle a sudden surge in traffic or gracefully evolve with new features. For SMBs and e-commerce platforms, getting this foundation right isn't just a technical detail, it's a competitive advantage. Poor design inevitably leads to slow queries, data corruption, and maintenance nightmares that can cripple growth and frustrate users.

In contrast, following proven database design best practices creates a robust, efficient, and secure backbone for your business operations. A solid schema prevents data inconsistencies, simplifies development, and makes future updates significantly less painful. It’s the difference between an application that feels sluggish and unreliable and one that is responsive, accurate, and ready to scale alongside your business. This foundational work directly impacts everything from customer checkout speed to inventory management accuracy.

This guide cuts through the noise to provide a prioritized, actionable roundup of the most critical practices you need to implement. We will move beyond theory and dive into practical steps with concrete examples tailored to the real-world challenges faced by e-commerce retailers and growing businesses. You will learn how to properly structure your data, enforce integrity with constraints, optimize for performance with indexing, and plan for future growth and maintenance. By the end, you’ll have a clear roadmap to building a database that not only supports your application today but also sets it up for long-term success.

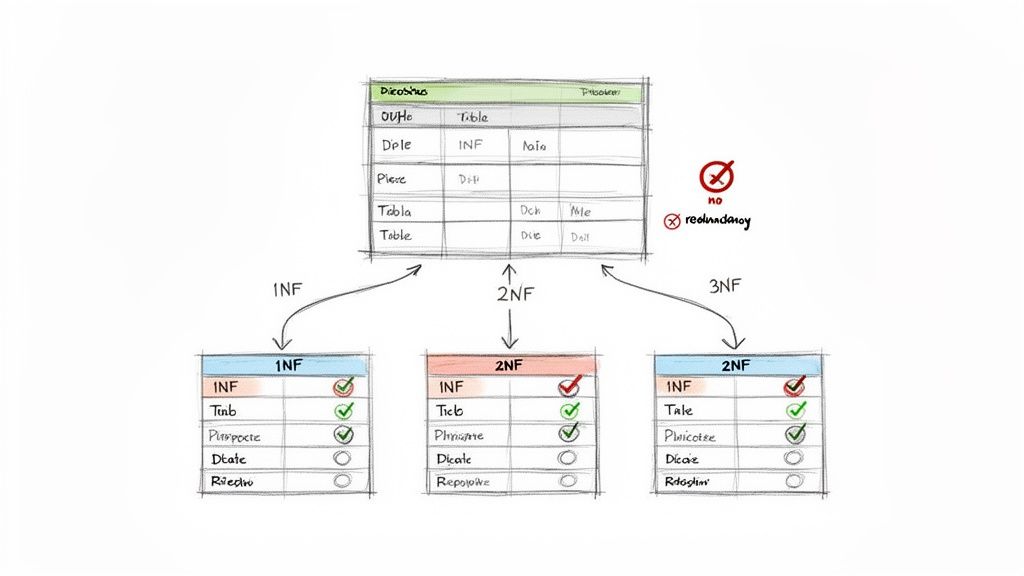

1. Database Normalization (Normal Forms)

Normalization is a cornerstone of relational database design, serving as a systematic process to organize tables and minimize data redundancy. The core goal is to ensure data is stored logically and efficiently by decomposing large, unwieldy tables into smaller, well-structured relations. This process follows a series of guidelines known as normal forms, most commonly First (1NF), Second (2NF), and Third (3NF) Normal Form. Following these principles is a fundamental aspect of robust database design best practices.

By isolating data into distinct tables, normalization prevents modification anomalies. For instance, if a customer's address is stored with every order they place, changing that address requires updating multiple records, creating a high risk of inconsistency. Normalization solves this by storing customer information in a separate Customers table, linked to an Orders table via a customer ID. This ensures data integrity, reduces wasted storage, and simplifies data management.

Why Normalization Matters

In an e-commerce application, a normalized schema would separate concepts into distinct tables: Customers, Products, Orders, and Order_Items. This structure is highly efficient for transactional operations (OLTP systems) where data accuracy and consistency are paramount. It prevents a single product name update from requiring changes across thousands of order records, which would be both slow and error-prone.

Actionable Implementation Tips

To effectively apply normalization, follow these targeted guidelines:

- Aim for 3NF: For most web applications, especially those handling transactional data like e-commerce or business operations, achieving Third Normal Form is the ideal balance. It eliminates the most common data anomalies without introducing excessive complexity.

- Document Dependencies: Before designing your schema, clearly map out the functional dependencies between data attributes. This a fancy way of saying, "What data depends on what other data?". For example,

product_pricedepends onproduct_id, notorder_id. - Balance with Performance: While normalization is crucial for data integrity, highly normalized schemas can sometimes lead to complex queries requiring many joins, which can slow down read-heavy operations. Be prepared to use denormalization strategically for specific, performance-critical reporting queries.

2. Primary Key and Unique Constraints

Primary keys are the unique identifiers for each record in a database table, serving as the absolute address for a specific row. A primary key cannot contain NULL values and must be unique across the entire table. Complementing this, unique constraints also enforce uniqueness on a column or set of columns but can allow one NULL value. Together, they are essential tools for maintaining entity integrity and are foundational to building reliable relational databases.

This enforcement of uniqueness is a critical element of strong database design best practices. It prevents duplicate data at the source, ensuring that every entity, whether a customer or an order, is singularly identifiable. This uniqueness forms the bedrock for creating relationships between tables, allowing an Orders table to accurately reference a specific user in the Customers table via their primary key. Without these constraints, data becomes ambiguous and unreliable.

Why Keys and Constraints Matter

In a banking application, an auto-incrementing integer might serve as the primary key for the Accounts table. While efficient for joins and indexing, the bank account number, which is a natural business identifier, would be an excellent candidate for a unique constraint. This ensures no two accounts can have the same account number, preserving data integrity, while the stable, internal primary key handles the relational logic behind the scenes.

Actionable Implementation Tips

To implement keys and constraints effectively, consider these best practices:

- Prefer Surrogate Keys: Use system-generated, non-business keys (like auto-incrementing integers or UUIDs) as primary keys. These "surrogate keys" are stable and unaffected by changes in business rules, unlike "natural keys" such as an email address or product SKU, which might change over time.

- Use UUIDs for Distributed Systems: In microservices or distributed architectures where multiple systems might generate records independently, use Universally Unique Identifiers (UUIDs) as primary keys to avoid ID collisions.

- Keep Primary Keys Small: A smaller data type for your primary key (e.g.,

INTvs.BIGINTor a long string) leads to more compact and faster indexes, not just on the primary table but on all foreign keys that reference it. - Document the Natural Key: Even when using a surrogate primary key, identify and apply a unique constraint to the column(s) that form the natural business key (e.g.,

user_email,isbn_number) to enforce business rules and prevent logical duplicates.

3. Foreign Key Constraints and Referential Integrity

Foreign key constraints are the ligaments of a relational database, linking tables together and enforcing crucial business rules. A foreign key in one table points to the primary key of another, creating a relationship that ensures the data between them remains consistent and valid. This enforcement mechanism, known as referential integrity, is a pillar of reliable database design best practices.

By establishing these constraints, you prevent "orphaned" records, such as an order that references a non-existent customer. The database itself rejects any insert or update that would violate this relationship, safeguarding data quality at the most fundamental level. For example, an Orders table would have a customer_id column that is a foreign key referencing the id in the Customers table, guaranteeing every order is tied to a real customer.

Why Referential Integrity Matters

In an HR system, referential integrity prevents an employee from being assigned to a department that doesn't exist. The employees table's department_id would be a foreign key pointing to the departments table. Attempting to add an employee with an invalid department_id would fail, which is exactly the desired behavior to maintain a clean, trustworthy dataset. This automatic validation is far more robust than relying on application-level checks alone.

Actionable Implementation Tips

To effectively implement foreign keys and maintain referential integrity, follow these guidelines:

- Define Constraints at the Database Level: Always declare foreign key constraints within your database schema (using

CREATE TABLEorALTER TABLE). Do not rely solely on your application logic to enforce these rules, as this can be bypassed. - Choose Cascade Actions Deliberately: Decide what happens when a referenced record is deleted or updated. Use

ON DELETE CASCADEto automatically delete related records (e.g., deleting a customer deletes their orders) orON DELETE SET NULLto nullify the reference, but only if your business logic allows it.RESTRICTis the safest default. - Index Your Foreign Key Columns: Foreign key columns are frequently used in

JOINoperations. Adding an index to these columns is one of the most effective ways to boost query performance, especially as your dataset grows.

4. Data Type Selection and Consistency

Choosing the correct data type for each column is a foundational yet critical step in database design. This practice directly impacts data integrity, storage efficiency, and query performance. By assigning the most appropriate data type, you enforce constraints at the database level, ensuring that, for example, a date column only accepts valid dates, and a numeric column only accepts numbers. This precision is a key element of effective database design best practices.

Inconsistent or poorly chosen data types can lead to subtle bugs and significant performance degradation. For instance, storing monetary values as a FLOAT can introduce rounding errors, while using a generic TEXT type for a fixed-length postal code wastes space and slows down indexing. Selecting specific types like DECIMAL for currency or VARCHAR(10) for a postal code not only prevents invalid data but also allows the database to optimize storage and retrieval operations, making the entire application faster and more reliable.

Why Data Type Selection Matters

In an e-commerce platform, storing product prices requires precision. Using DECIMAL(10,2) ensures that all financial calculations are exact, preventing the small but cumulative errors that can occur with floating-point numbers. Similarly, using BIGINT for the primary key in a high-volume Orders table anticipates future growth, preventing the system from running out of unique IDs, which would be a catastrophic failure for a growing business.

Actionable Implementation Tips

To apply this practice effectively, use these targeted guidelines:

- Use

DECIMALfor Financial Data: Always use theDECIMALorNUMERICdata type for currency and other values where exact precision is non-negotiable. AvoidFLOATorDOUBLEfor monetary calculations. - Choose Integers Wisely: Use

INTfor primary keys in most tables. For tables expected to exceed two billion rows, such as event logs or transactions in a massive system, be proactive and useBIGINTfrom the start. - Prefer

VARCHAROverTEXT: When you know the maximum length of a string, useVARCHAR(n). This is more storage-efficient and performs better than genericTEXTorCLOBtypes. ReserveTEXTfor genuinely long-form, unstructured content. - Use Specific Date and Time Types: Employ

TIMESTAMP WITH TIME ZONEfor applications that operate across multiple regions to avoid ambiguity. UseDATEfor columns that only need the date part, like a birthdate.

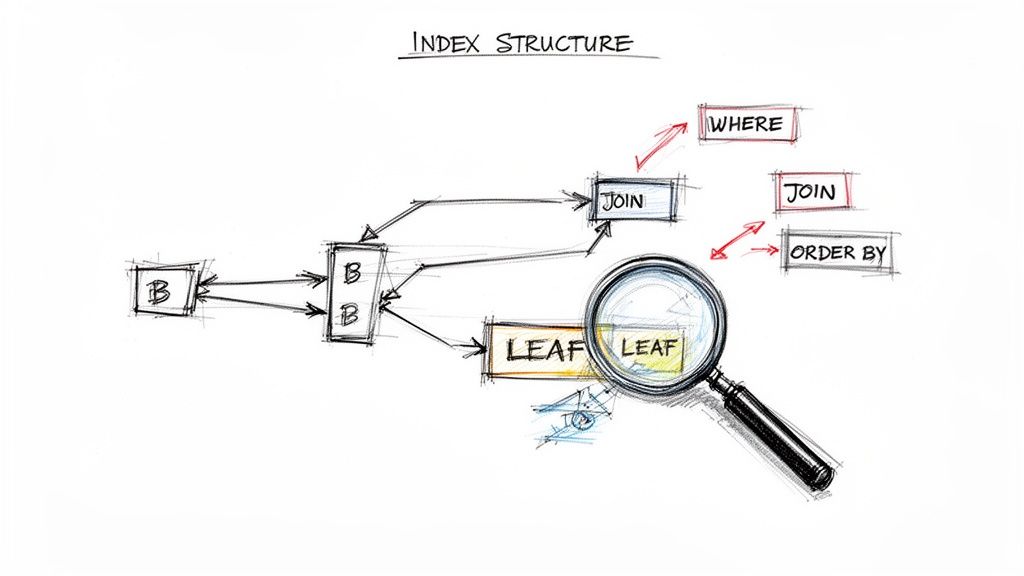

5. Indexing Strategy and Performance Optimization

An index is a database structure that provides quick lookup of data in a table, much like an index in a book helps you find information without reading every page. A well-designed indexing strategy is a critical component of database design best practices, as it dramatically speeds up data retrieval operations (queries) by reducing the number of disk reads required. However, this performance gain comes at a cost: indexes consume storage space and add overhead to write operations (INSERT, UPDATE, DELETE), as the index must also be updated.

The core challenge is to strike a balance between accelerating read queries and minimizing the performance penalty on writes. For example, in an e-commerce application, placing an index on the product_id column in an Orders table allows the system to instantly find all orders for a specific product, a common and crucial operation. Without it, the database would have to scan the entire table, which could be millions of rows, leading to slow performance and a poor user experience.

Why Indexing Matters

In a social media platform, users frequently search for content. A full-text index on post content enables fast, keyword-based searches that would otherwise be prohibitively slow. Similarly, a banking application running a query for a customer's recent transactions benefits immensely from a composite index on (account_id, transaction_date), which efficiently filters and sorts the data in a single operation. Proper indexing directly impacts application responsiveness and scalability.

Actionable Implementation Tips

To build an effective indexing strategy that enhances performance without creating unnecessary overhead, follow these guidelines:

- Be Selective: Index columns that are frequently used in

WHEREclauses,JOINconditions, andORDER BYclauses. These are the operations that benefit most from an index. - Avoid Over-Indexing: Each index requires maintenance during write operations and consumes disk space. Creating too many indexes, especially on tables with heavy write loads, can degrade overall performance.

- Use Composite Indexes: For queries that filter on multiple columns, create a composite (multi-column) index. The order of columns in the index matters, so place the most selective column first.

- Monitor and Prune: Regularly use database tools like

EXPLAINor query execution plans to analyze which indexes are being used. Remove unused indexes to reduce maintenance overhead and free up storage.

Beyond just indexing, understanding how to effectively improve SQL query performance is crucial for peak database operation. You can learn more about optimizing SQL queries for peak performance. For a deeper dive into overall database health, explore these database management best practices.

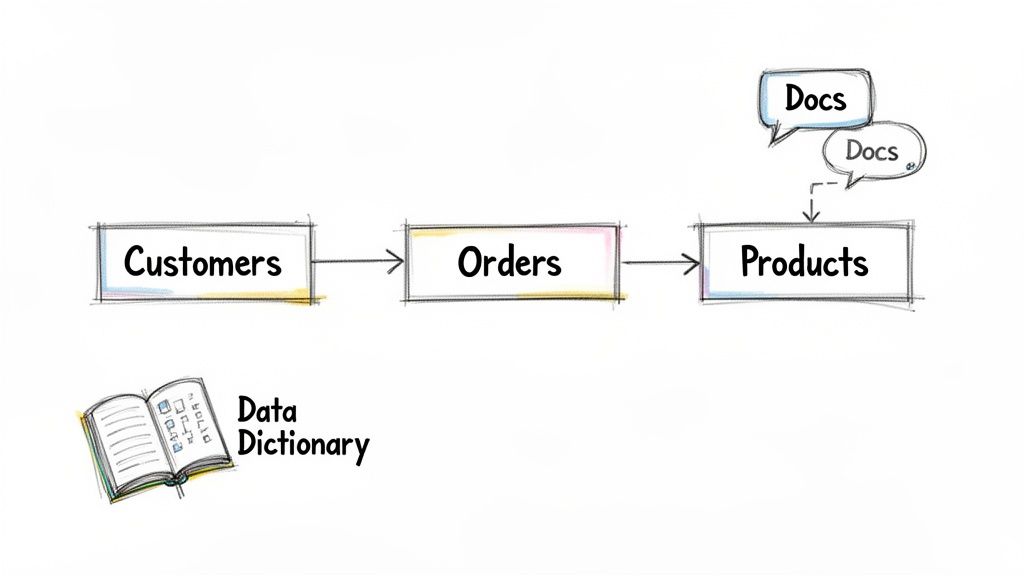

6. Documentation and Schema Communication

An undocumented database is a ticking time bomb for any development team. Comprehensive documentation of schema design, table relationships, and business rules is a critical, yet often overlooked, component of sustainable development. It acts as a single source of truth, ensuring that developers, DBAs, and business stakeholders share a common understanding of the data landscape. This practice is essential for effective database design best practices, as it dramatically reduces onboarding time and prevents costly misunderstandings.

Clear communication through documentation ensures system longevity and simplifies maintenance. When a developer leaves a project, a well-documented schema allows the next person to understand the "why" behind design decisions, not just the "what." For example, knowing why a specific column is nullable or why a certain data type was chosen can prevent future bugs and maintain data integrity. This practice is a cornerstone of professional software engineering and aligns with broader best practices for web development.

Why Documentation Matters

In an e-commerce platform, thorough documentation might include an Entity-Relationship Diagram (ERD) that visually maps the Customers, Orders, and Products tables. A corresponding data dictionary would define each column, such as specifying that Orders.status can only contain values like 'pending', 'shipped', or 'cancelled'. This prevents developers from introducing invalid states and ensures business logic is consistently enforced across the application.

Actionable Implementation Tips

To create documentation that empowers your team, implement these strategies:

- Automate Where Possible: Use tools like dbdocs.io or SchemaCrawler to automatically generate documentation from your live database schema. This keeps the documentation current with minimal manual effort.

- Document the 'Why': Don't just list a column's name and data type. Explain its business purpose. For example, instead of just "is_active (boolean)", write "is_active: Set to false to soft-delete a user without losing their order history."

- Keep Docs with Code: Store your schema diagrams, data dictionaries, and other database documentation within your project's version control repository (e.g., Git). This makes it a natural part of the development workflow.

- Create a Data Dictionary: Maintain a central document or tool that defines every table and column. Include data types, constraints, default values, and a plain-language description of its purpose.

7. Denormalization for Performance (Strategic Redundancy)

Denormalization is the intentional process of introducing controlled redundancy into a database to boost read performance. While it seems counterintuitive after focusing on normalization, it's a critical optimization technique. This deliberate trade-off sacrifices some storage efficiency and data integrity simplicity to reduce complex, slow-running queries. Applying this strategy is a key part of advanced database design best practices, especially in systems where query speed is paramount.

Unlike the accidental redundancy found in poorly designed databases, strategic denormalization is a calculated decision. For example, an e-commerce platform might store a pre-calculated order_total in its Orders table, even though this value can be derived by summing items from the Order_Items table. This avoids a costly join and aggregation every time an order history page is loaded, dramatically improving user experience.

Why Denormalization Matters

In read-heavy applications like analytics dashboards or social media feeds, normalized schemas can lead to prohibitively slow performance due to the sheer number of joins required. Denormalization pre-computes and stores these results. A social media app might store a comment_count on a Posts table instead of counting related records in real-time. This ensures the feed loads instantly, even for posts with thousands of comments. When optimizing for performance in data warehousing, it's crucial to understand the implications of different models like the Star Schema vs. Snowflake Schema, which inherently use denormalization principles to speed up reporting.

Actionable Implementation Tips

To apply denormalization effectively without compromising your data, follow these guidelines:

- Denormalize Reactively: Only denormalize after identifying a specific performance bottleneck through query profiling. Do not denormalize speculatively during the initial design phase.

- Use Triggers or Jobs: Implement database triggers or background jobs to keep redundant data synchronized. For example, a trigger on the

Order_Itemstable can update theorder_totalin theOrderstable whenever an item is added or removed. - Document Everything: Clearly document all denormalized fields, their source data, and the mechanism used to keep them updated. This is crucial for future maintenance and troubleshooting.

- Verify the Benefit: Always measure query performance before and after implementing denormalization to confirm that the performance gain justifies the added complexity and redundancy.

8. Audit Columns and Historical Tracking

Implementing audit columns and historical tracking is a critical practice for maintaining data integrity, ensuring accountability, and enabling robust system analysis. This involves adding specific metadata columns to your tables, such as created_at, updated_at, created_by, and updated_by, to record when a record was made and last modified, and by whom. This technique creates a transparent log of data changes directly within your schema, forming a vital part of secure and compliant database design best practices.

By embedding this history into your tables, you gain the ability to answer crucial questions about your data's lifecycle. If an order status is unexpectedly changed or customer information is altered, audit columns provide an immediate, undeniable trail. This is essential for debugging application logic, satisfying regulatory compliance like HIPAA or GDPR, and understanding user behavior over time. It transforms your database from a static repository into a dynamic system with a complete, traceable history.

Why Historical Tracking Matters

In a healthcare application, maintaining an immutable history of patient record changes is a legal requirement. Audit columns and historical tracking would show exactly which practitioner updated a patient's allergy information and when, providing a clear audit trail for compliance. Similarly, in an e-commerce platform, tracking inventory level changes or order modifications helps resolve customer disputes and identify potential system bugs or fraudulent activity.

Actionable Implementation Tips

To effectively implement historical tracking, follow these targeted guidelines:

- Automate with Triggers: Use database triggers to automatically populate

created_atandupdated_atcolumns. This removes the burden from your application code and guarantees that the timestamps are always accurate and consistently applied. - Use Database Timestamps: Always rely on the database's internal clock functions (e.g.,

CURRENT_TIMESTAMP,NOW()) rather than passing timestamps from the application. This prevents inconsistencies caused by server clock drift or timezone misconfigurations. Use UTC for all timestamps to create a universal standard. - Implement Soft Deletes: Instead of permanently deleting records, use a flag like

is_deletedordeleted_at. This "soft delete" approach preserves the record for historical analysis and allows for easy data recovery, which is invaluable for business intelligence and support operations. - Consider Separate Audit Tables: For high-volume transactional tables, logging every change in a separate audit or history table can prevent the primary table from bloating. This keeps the active dataset lean and performant while retaining a full, detailed history for analysis.

9. Schema Versioning and Migration Management

As web applications evolve, their underlying database schemas must change to support new features or optimizations. Schema versioning and migration management is a systematic approach to applying these changes in a controlled, repeatable, and reversible way. It treats database schema changes as code, allowing them to be version-controlled alongside the application source code. This practice is essential for maintaining consistency across development, testing, and production environments, forming a critical pillar of modern database design best practices.

Without a managed migration process, database changes are often applied manually, leading to disastrous inconsistencies. For example, a developer might add a new last_login_ip column to their local database but forget to apply it to the production environment, causing the application to crash upon deployment. Migration management solves this by codifying the change into a script that can be automatically and reliably executed everywhere. This ensures data integrity, prevents deployment failures, and simplifies collaboration within development teams.

Why Schema Versioning Matters

In an e-commerce platform undergoing rapid development, new features constantly require schema alterations, like adding a gift_wrap_option column to the Orders table. Using a migration tool ensures this change is applied consistently across all developer machines, the staging server, and finally, the production database. This disciplined process prevents the "it works on my machine" problem and is fundamental to supporting continuous integration and continuous deployment (CI/CD) pipelines, enabling faster, more reliable feature releases.

Actionable Implementation Tips

To effectively manage schema evolution, follow these targeted guidelines:

- Use a Dedicated Migration Tool: Adopt established tools like Flyway (for Java), Alembic (for Python/SQLAlchemy), or the built-in migration systems in frameworks like Django or Rails. These tools automate the process of tracking and applying migrations.

- Write Reversible Migrations: Always create both an "UP" migration (to apply the change) and a "DOWN" migration (to revert it). This is a lifesaver when a deployment needs to be rolled back quickly.

- Keep Migrations Small and Atomic: Each migration file should address a single, specific concern, such as adding one column, creating one index, or renaming a table. This makes migrations easier to test, debug, and manage.

- Plan for Zero-Downtime Deployments: For critical production systems, use strategies like creating new columns as nullable first, deploying code that can handle both states, and then enforcing

NOT NULLconstraints in a subsequent migration. This avoids locking tables and causing application downtime.

10. Security Design and Access Control

Database security is not an afterthought; it is a critical component that must be integrated directly into the schema design phase. It involves a multi-layered approach of authentication, authorization, encryption, and auditing to safeguard sensitive information. Proactively designing for security ensures that data is protected from unauthorized access, modification, or disclosure, which is a fundamental requirement for building trust and meeting regulatory compliance like GDPR or HIPAA. This approach is a core pillar of modern database design best practices.

By embedding security measures into the database structure itself, you create a robust defense-in-depth strategy. Instead of relying solely on application-level checks, you enforce rules at the data layer, the last line of defense. For instance, in a multi-tenant SaaS application, row-level security can ensure that one tenant's data is completely invisible to another, preventing catastrophic data leaks even if an application bug exists. This builds a resilient and secure foundation for any web application.

Why Security by Design Matters

In a healthcare application, robust access control is non-negotiable. A well-designed schema would use row-level security to ensure a doctor can only view records for patients under their care, and column-level encryption would protect highly sensitive fields like Social Security Numbers. This prevents broad data exposure and limits the impact of a potential breach. Similarly, an e-commerce platform must restrict access to personally identifiable information (PII) to only authorized customer service roles, a rule best enforced at the database level. To dive deeper into this topic, you can learn more about comprehensive database security best practices.

Actionable Implementation Tips

To effectively integrate security into your database design, implement these specific guidelines:

- Enforce Least Privilege: Grant database users and roles the absolute minimum permissions required to perform their jobs. If a service only needs to read data, give it

SELECTpermissions and nothing more. - Use Database Roles: Instead of assigning permissions to individual user accounts, create roles like

readonly,data_analyst, orsupport_agent. This simplifies permission management and reduces the risk of error. - Encrypt Sensitive Data: Always encrypt sensitive data at rest. Use column-level encryption for specific fields like financial account numbers or API keys. Never store raw passwords; use a strong, salted hashing algorithm like bcrypt.

- Implement Row-Level Security (RLS): For multi-tenant applications or systems with complex user hierarchies, use RLS to filter data automatically based on the current user's role or attributes, ensuring users only see the data they are authorized to see.

10-Point Database Design Best Practices Comparison

| Item | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |

|---|---|---|---|---|---|

| Database Normalization (Normal Forms) | 🔄 Medium–High — careful schema decomposition and dependency analysis | ⚡ Moderate dev time; modest runtime costs but more joins | 📊 High integrity, reduced redundancy; may increase read-time due to joins | 💡 OLTP systems (e‑commerce, banking, healthcare) | ⭐ Strong consistency, prevents anomalies, easier maintenance |

| Primary Key and Unique Constraints | 🔄 Low — straightforward to define and enforce | ⚡ Low — small index & storage overhead | 📊 Guarantees uniqueness and efficient lookups | 💡 Every relational table; foundation for FK relationships | ⭐ Ensures record uniqueness, enables indexing and referential integrity |

| Foreign Key Constraints and Referential Integrity | 🔄 Low–Medium — define relations and cascade rules | ⚡ Low–Medium — write-path overhead, needs FK indexing | 📊 Enforced referential integrity; prevents orphaned records | 💡 Parent‑child relations (orders→customers, enrollments) | ⭐ Database‑level relationship enforcement, reduces app validation |

| Data Type Selection and Consistency | 🔄 Medium — requires analysis of data characteristics | ⚡ Low — affects storage efficiency and query speed | 📊 Accurate storage, fewer conversions, improved performance | 💡 All schemas; especially high‑volume and financial systems | ⭐ Optimizes storage & performance, prevents type errors |

| Indexing Strategy and Performance Optimization | 🔄 Medium–High — requires query analysis and tuning | ⚡ High — storage and write/maintenance overhead | 📊 Dramatic query speed improvements for targeted queries | 💡 Read‑heavy workloads, search, reporting, hot paths | ⭐ Significant read performance gains; supports sorting & joins |

| Documentation and Schema Communication | 🔄 Low–Medium — initial effort plus ongoing updates | ⚡ Low–Moderate — tooling and maintenance time | 📊 Better onboarding, fewer mistakes, clearer change rationale | 💡 Teams, large projects, regulated environments | ⭐ Improves collaboration, auditability, and maintainability |

| Denormalization for Performance (Strategic Redundancy) | 🔄 Medium — strategic trade‑offs and sync mechanisms | ⚡ Moderate–High — extra storage and update complexity | 📊 Faster reads, simpler queries; increased consistency risk | 💡 Analytics, reporting, read‑heavy services with slow joins | ⭐ Significant query speedups for specific use cases |

| Audit Columns and Historical Tracking | 🔄 Low–Medium — add audit fields or tables, triggers | ⚡ Moderate — additional storage, indexing, some perf cost | 📊 Traceability, recovery, compliance; supports temporal queries | 💡 Regulated domains, debugging, systems requiring lineage | ⭐ Enables accountability, compliance, and historical analysis |

| Schema Versioning and Migration Management | 🔄 Medium–High — process discipline and tooling needed | ⚡ Moderate — CI/CD, test environments, migration effort | 📊 Reproducible, auditable schema changes with rollback options | 💡 Evolving products, teams practicing continuous delivery | ⭐ Safe, repeatable migrations and environment parity |

| Security Design and Access Control | 🔄 High — policies, roles, encryption, auditing design | ⚡ High — operational overhead and possible perf impact | 📊 Strong protection and compliance; reduces breach risk | 💡 Sensitive data systems, multi‑tenant SaaS, regulated sectors | ⭐ Fine‑grained access control, encryption, regulatory compliance |

From Blueprint to Reality: Implementing Your Database Strategy

We have journeyed through the core tenets of effective data architecture, exploring the critical database design best practices that distinguish a fragile application from a resilient, high-performance one. From the foundational logic of normalization to the strategic application of denormalization, each practice serves a distinct purpose in building a system that is both powerful and maintainable. The path from a theoretical blueprint to a functional, real-world database is paved with deliberate choices and a deep understanding of these principles.

Mastering these concepts transforms your database from a simple storage container into a strategic business asset. A well-designed database is the bedrock of a scalable e-commerce platform, the engine of a content-rich publishing site, and the reliable backbone for any custom web application. It directly impacts user experience through faster load times, ensures data integrity for accurate business reporting, and enhances security to build customer trust.

Synthesizing the Core Principles

Let's distill the most crucial takeaways from our exploration. Think of these as the non-negotiable pillars of a sound database strategy:

- Structure First, Speed Second: Always begin with a normalized schema (up to 3NF). This enforces data integrity and provides a clean, logical foundation. Performance optimizations like indexing and strategic denormalization should be applied thoughtfully afterward, based on real-world query patterns, not premature assumptions.

- Constraints are Your Allies: Don't treat Primary Keys, Foreign Keys, and Unique constraints as optional. They are your database's first line of defense against inconsistent and corrupted data. Enforcing referential integrity at the database level prevents a wide range of application-level bugs and ensures your data relationships remain valid.

- Indexing is an Art and a Science: An index is not a magic bullet for performance. Over-indexing can degrade write performance just as under-indexing can cripple read speeds. The key is to analyze your application's most frequent and costly queries and create targeted, composite indexes that serve those specific access patterns.

- Documentation is Not an Afterthought: A database without clear documentation and a schema versioning strategy is a ticking time bomb. Future developers (or even you, six months from now) will need this context to make safe, effective changes. Treat your schema documentation and migration scripts with the same care as your application code.

Your Actionable Path Forward

Moving from theory to implementation requires a methodical approach. For the small and mid-sized businesses we work with, from Omaha startups to established e-commerce retailers, putting these practices into action is what drives real growth and stability.

Here are your next steps:

- Audit Your Current Schema: If you have an existing database, review it against the principles discussed. Are your data types appropriate? Do you have unenforced relationships? Are your indexes being used effectively? Use your database's query analysis tools to identify performance bottlenecks.

- Establish a Naming Convention: If you don't have one, create one now. A consistent and descriptive naming convention for tables, columns, and constraints makes the schema instantly more understandable and easier to maintain.

- Implement a Migration Tool: Adopt a schema migration tool like Flyway or Liquibase. This brings version control and discipline to your database changes, making deployments repeatable, reversible, and far less risky.

- Prioritize Security from Day One: Review your access control policies. Follow the principle of least privilege, ensuring that application users and services have only the permissions they absolutely need to function. This simple step is a cornerstone of robust security design.

Ultimately, adopting these database design best practices is an investment in your application's future. It’s about building a foundation that can withstand evolving business requirements, handle increasing user loads, and empower your organization with clean, reliable data. This is not just a technical exercise; it's a fundamental business decision that pays dividends in scalability, reduced maintenance costs, and enhanced operational agility.

For Omaha-based businesses and beyond, translating these principles into a high-performance web application requires specialized expertise. The team at Up North Media lives and breathes these best practices, building custom, scalable data architectures that power growth for e-commerce and content-driven platforms. If you're ready to build a web application on a foundation designed for success, schedule a free consultation with Up North Media today.