"Serverless" is one of those tech terms that sounds like a flat-out lie. How can you possibly run an application without servers? The name is a bit of a misnomer, but the idea behind it is simple: you don't have to manage them. With serverless, the headache of provisioning, scaling, and maintaining infrastructure gets handed off from your team to a cloud provider.

What Is Serverless Architecture, Really?

Let's use an analogy. Managing traditional servers is like owning a restaurant. You’re on the hook for everything—buying the ovens, staffing the kitchen, paying the electric bill, and mopping the floors. If a massive tour bus pulls up, you might not have the capacity to serve everyone. On a slow Tuesday night, you're still paying to keep the lights on and the whole kitchen running.

Serverless architecture is like hiring a catering company for your event. You just give them the menu (your code) and tell them when guests arrive (an event trigger). The caterer handles everything else: finding the right-sized kitchen, bringing in staff, cooking the food, and cleaning up. You only pay for the plates of food that are actually served, not for the kitchen's downtime.

This model is a huge leap from older approaches. To get the full picture, it helps to understand the foundational layer it's built on, so check out our guide on what cloud hosting is.

To put it in simple terms, serverless is all about abstracting away the boring parts of infrastructure so you can focus on building things that matter. Here’s a quick breakdown of what that means in practice.

Serverless Architecture At a Glance

This table breaks down the core concepts that make serverless a different way of thinking about application development.

| Core Principle | What It Means for You |
|---|---|
| No Server Management | You never have to patch, update, or provision a server again. The cloud provider handles it. |
| Event-Driven Execution | Your code runs only when something specific happens (an event), so you don't pay for idle time. |
| Pay-Per-Use Billing | You are billed only for the compute time your code actually uses, often down to the millisecond. |
| Automatic Scaling | The provider automatically scales resources up or down to match demand, from zero to thousands of requests. |

These principles work together to create an environment where you can build and deploy applications faster and more efficiently than ever before.

The Core Idea: An Event-Driven Approach

The "magic" that makes serverless work is its event-driven nature. Instead of a server sitting idle 24/7, waiting for something to happen, serverless functions are completely dormant until a specific event triggers them.

That trigger could be almost anything:

- A user clicking "submit" on a contact form (an API request).

- A new photo being uploaded to a storage bucket.

- A customer record being updated in your database.

- A scheduled task that needs to run at the top of every hour.

Once that trigger fires, the cloud provider spins up the resources needed (or reuses an instance still warm from a recent request), runs your code, and scales back down when the work is done. This efficient, on-demand execution is exactly why so many companies are adopting a serverless-first mindset.

In short, serverless computing takes the server completely out of the developer's mind. They can focus purely on writing code that delivers business value, while the cloud provider manages all the underlying complexity of resource allocation and scaling.

A Rapidly Growing Market

This shift has been more than just a trend; it's fueling massive growth. The global serverless architecture market was valued at around $3.1 billion back in 2017. Fast forward to today, and projections show it hitting nearly $22 billion by 2025.

That’s a compound annual growth rate of about 27.8%. It's clear that serverless has moved from a niche concept to a central pillar of modern cloud strategy for businesses of all sizes.
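The growth figure follows from the standard compound annual growth rate formula, (end value / start value)^(1 / years) - 1. A quick Python check, plugging in the 2017 and 2025 figures quoted above (an 8-year span):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# $3.1B in 2017 growing to roughly $22B in 2025 spans 8 years.
rate = cagr(3.1, 22.0, 2025 - 2017)
print(f"CAGR: {rate:.1%}")
```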

Understanding FaaS And BaaS: The Two Pillars Of Serverless

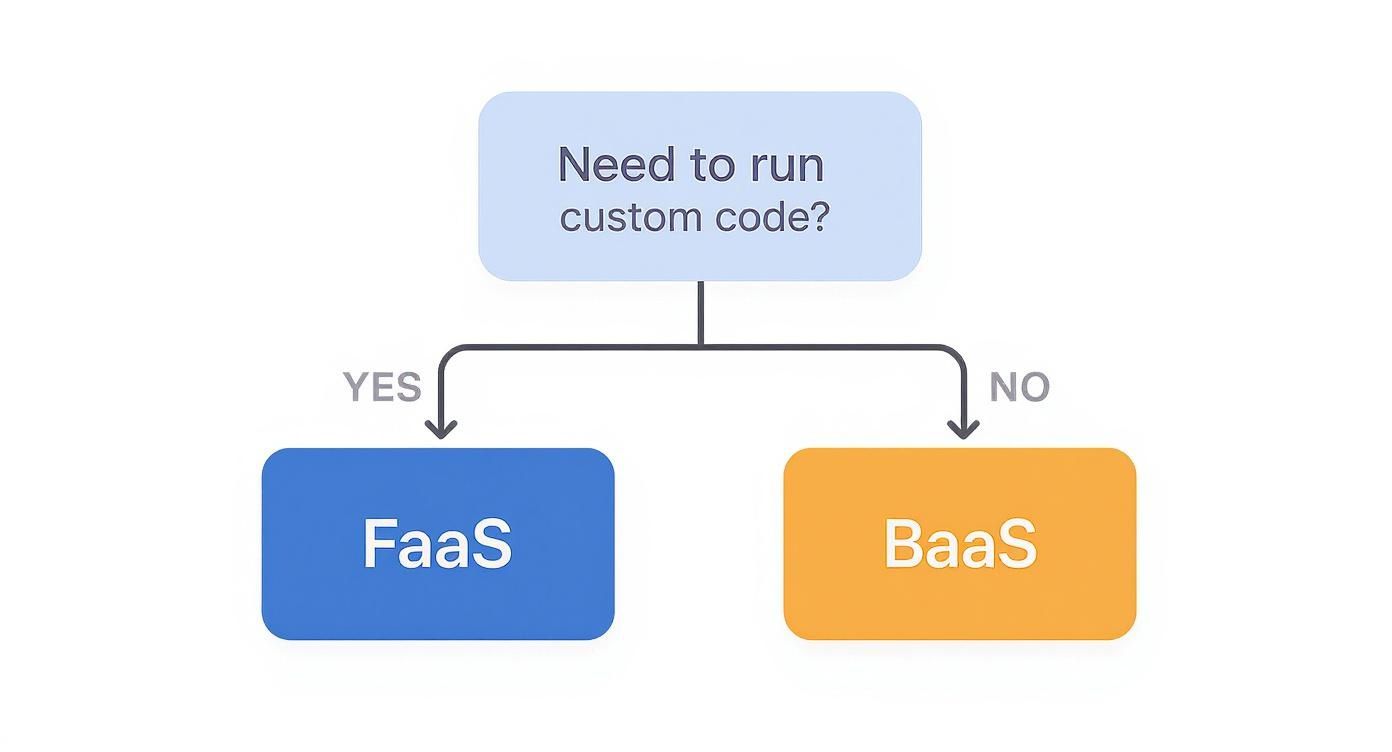

To really get your head around serverless, you need to understand its two core parts: Function-as-a-Service (FaaS) and Backend-as-a-Service (BaaS). They work together, but they solve completely different problems. The key is to think of them as separate but complementary pieces that unlock the real power of building modern applications.

One pillar handles all your custom code, while the other gives you a toolkit of ready-made backend features. This setup lets development teams move way faster and spend more time building unique stuff for their users.

FaaS: The On-Demand Compute Engine

Function-as-a-Service (FaaS) is the engine of the serverless world. This is where your actual code runs. The best way to think about it is like a motion-activated security light. The light doesn’t burn all night wasting electricity; it only flicks on for the few seconds it detects movement (an event).

That’s exactly how a FaaS function works. It sits there, doing nothing, until a specific event triggers it. That trigger could be almost anything:

- An API call when a user clicks "buy now" on your e-commerce site.

- A new file upload to a storage bucket, like a user adding a profile picture.

- A database change, like a new customer signing up.

- A scheduled timer that kicks off a report every morning at 8 AM.

The moment that trigger happens, the cloud provider spins up the resources, runs your little piece of code (the function), and then scales back down. You only pay for the fraction of a second the function was actually running. This model wipes out the cost of paying for idle servers.
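Here's roughly what that looks like in code. This is a minimal sketch in the `handler(event, context)` shape AWS Lambda uses for Python functions; the request fields (`productId`, `quantity`) are hypothetical stand-ins for what an API gateway might pass along from a "buy now" click, and the platform's role is simulated with a plain function call.

```python
import json


def handler(event, context):
    """Handle a hypothetical 'buy now' API request.

    The platform calls this only when a request arrives; between
    requests nothing is running, so nothing is billed.
    """
    body = json.loads(event.get("body") or "{}")
    product_id = body.get("productId")
    quantity = int(body.get("quantity", 1))

    if not product_id or quantity < 1:
        return {"statusCode": 400, "body": json.dumps({"error": "invalid order"})}

    # Real business logic (pricing, inventory, payment) would go here.
    order = {"productId": product_id, "quantity": quantity, "status": "received"}
    return {"statusCode": 201, "body": json.dumps(order)}


# Simulate the platform invoking the function for a single event.
fake_event = {"body": json.dumps({"productId": "sku-123", "quantity": 2})}
print(handler(fake_event, None))
```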

BaaS: The Pre-Built Backend Toolkit

Backend-as-a-Service (BaaS) is the second pillar. Think of it as a collection of managed, off-the-shelf services that handle all the common backend chores. It’s like building a house with pre-fabricated components. Instead of building your own doors, windows, and plumbing from scratch, you just buy high-quality, ready-made ones.

BaaS does the same thing for software. Instead of spending months building and maintaining complex backend systems, your team can plug in managed services for things like:

- Authentication: Services like Amazon Cognito or Auth0 handle all the user sign-up, login, and security headaches.

- Databases: Managed databases like Amazon DynamoDB or Google Firestore give you scalable storage without ever touching a server.

- File Storage: Services like Amazon S3 provide a simple way to store and retrieve photos, videos, or any other user content.

- Push Notifications: Let a provider manage the complicated infrastructure needed to send alerts to your users' phones.

By combining FaaS for your unique business logic and BaaS for the standard backend plumbing, you create a powerful, scalable application with a fraction of the traditional development effort. This is the core strategy behind a modern serverless architecture.
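To see the two pillars working together, here's a sketch of a sign-up function (FaaS) that hands storage off to a managed database (BaaS). It assumes a DynamoDB-style table: in a real AWS deployment you might pass in `boto3.resource("dynamodb").Table("Users")`, where the `Users` table name is hypothetical. For a local run, a small in-memory class mimics the same `put_item(Item=...)` call.

```python
import json
import uuid


class InMemoryTable:
    """Local stand-in for a managed database table, for testing only.

    It mirrors the put_item(Item=...) call shape of boto3's DynamoDB
    Table resource, so the handler code doesn't change between environments.
    """

    def __init__(self):
        self.items = []

    def put_item(self, Item):
        self.items.append(Item)
        return {}


def make_signup_handler(table):
    """Build a FaaS handler that stores new users in a BaaS database."""

    def handler(event, context):
        body = json.loads(event.get("body") or "{}")
        email = body.get("email")
        if not email:
            return {"statusCode": 400, "body": json.dumps({"error": "email required"})}

        # Our custom logic (FaaS) decides what to store...
        user = {"userId": str(uuid.uuid4()), "email": email.lower()}
        # ...and the managed database (BaaS) handles storage and scaling.
        table.put_item(Item=user)
        return {"statusCode": 201, "body": json.dumps(user)}

    return handler


table = InMemoryTable()
signup = make_signup_handler(table)
print(signup({"body": json.dumps({"email": "Ada@Example.com"})}, None))
```

Injecting the table keeps the business logic identical whether it talks to the real managed service or the local stand-in.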

This approach fits neatly into the broader world of cloud computing. If you want to get a better sense of the different service layers, this guide to cloud solutions for business (IaaS, PaaS, SaaS) offers some great context on how serverless builds on these foundational ideas.

Ultimately, FaaS gives you the power to run custom code without servers, while BaaS frees you from reinventing the wheel on standard backend tasks. Together, they let small teams and startups right here in Omaha build sophisticated apps that can compete on a global scale—all without a dedicated infrastructure team. This powerful combo is why serverless isn't just a buzzword; it's a defining model in cloud development.

Choosing Your Path: Serverless vs. Containers and VMs

To really get what serverless architecture is all about, it helps to see how it stacks up against the other ways folks have been building and running software. For years, businesses in Omaha and everywhere else have leaned on virtual machines (VMs) and, more recently, containers. Each one is a different trade-off between how much control you have, how easy it is to manage, and what it costs you.

I like to think of it like finding a place to live.

Using a traditional Virtual Machine (VM) is like buying a plot of land and building a house from scratch. You get total control over every single detail—the foundation, the framing, the plumbing—but you're also on the hook for all the maintenance, security, and upkeep. It's the ultimate in customization, but it comes with a ton of management work.

Containers, powered by tools like Docker and Kubernetes, are more like buying a high-quality prefab home. The main structure is already built and portable, so you can easily drop it onto different pieces of land (or move it between cloud providers). You still have to manage the "land" it sits on—the server or platform—but the actual setup is way faster and more standardized.

Serverless, on the other hand, is like renting a fully furnished, all-bills-paid apartment. You don’t think about the building’s foundation, the electricity bill, or fixing a leaky pipe. You just show up with your stuff (your code), and you only pay for the time you're actually inside using the space. It's the peak of convenience, but you give up control over the underlying infrastructure.

A Head-to-Head Comparison

Making the right call means looking at how these models really differ in day-to-day operations. The best fit usually comes down to your app's specific needs, your team's skillset, and your budget.

Here’s a straightforward breakdown to help you see the differences at a glance.

Serverless vs Containers vs Traditional Servers

| Attribute | Serverless (FaaS) | Containers (e.g., Kubernetes) | Traditional Servers (VMs/Bare Metal) |
|---|---|---|---|
| Management Overhead | Minimal. The cloud provider handles everything from the OS to the runtime. You bring the code and its configuration. | Medium. You manage the container orchestration (the "fleet") but not the physical hardware. | High. You're responsible for the OS, patching, security, and all installed software. |
| Scaling | Automatic. Scales from zero to thousands of requests without you lifting a finger, though new instances may add cold-start latency. | Automatic, but needs setup. You have to configure scaling rules and manage clusters. | Manual or complex. Requires you to provision new servers or set up complicated auto-scaling groups. |
| Cost Structure | Pay-per-execution. You're billed only for the compute time you use, often down to the millisecond. | Pay-for-reserved-resources. You pay for the nodes in your cluster, even when they're sitting idle. | Pay-for-uptime. You pay for the server as long as it's running, no matter how much traffic it gets. |
| Developer Focus | Mostly code. Developers write functions and connect them to events. | Code and configuration. Devs manage container images and orchestration files (like YAML). | Code and infrastructure. Devs are often pulled into server setup, maintenance, and troubleshooting. |

This table really spells out the trade-offs. VMs give you a blank canvas, containers give you a portable and consistent environment, and serverless gives you a fully managed platform where you can just focus on your code.

Strategic Trade-Offs to Consider

Picking between these isn't just a technical debate; it's a strategic business decision that hits your team's speed, your operational agility, and your bottom line.

- When to Choose VMs: Go with virtual machines when you need deep, granular control over the operating system, have custom networking requirements, or are running legacy apps that weren't built for modern, cloud-native environments.

- When to Choose Containers: Containers are perfect for complex applications that need to be portable across different clouds or on-premises data centers. They're the standard for building intricate microservices where you need fine-grained control over how services talk to each other and scale.

- When to Choose Serverless: Serverless shines for event-driven, stateless apps with spiky or unpredictable traffic. It’s a fantastic fit for API backends, data processing jobs, and tasks that only run occasionally since you only pay when they're actually working.

The decision really boils down to a balance of control versus convenience. The more control you demand over the environment, the more management you have to take on. Serverless sits at the far end of the spectrum, offering maximum convenience by abstracting away almost all of the infrastructure headaches.

As you weigh these options, remember this choice is a core part of your entire technical strategy. For a deeper look at how to make these kinds of foundational decisions, our guide on how to choose a tech stack offers some valuable context.

This decision tree helps visualize the main fork in the road when you go serverless.

The key takeaway here is that "serverless" isn't just one thing. It's an approach where FaaS handles your custom code, and BaaS provides all the ready-made backend services you need to support it.

Weighing The Real-World Benefits And Drawbacks

Jumping into a new technology like serverless isn’t just about the cool tech—it’s about what it actually means for your budget, your team's sanity, and how fast you can get things done. Like any powerful tool, it comes with some game-changing upsides and a few practical headaches you need to know about before you commit.

Seeing both sides of the coin is the only way to figure out if it's the right move for your projects. For startups and small businesses right here in Omaha, the benefits can be a massive leg up, fundamentally changing how you build and pay for software.

The Clear Advantages Of Going Serverless

Honestly, one of the biggest draws to serverless is the way it completely flips the cost model on its head. You stop paying for servers to sit around waiting for something to do. Instead, you only pay for the exact compute time your code uses, right down to the millisecond. This pay-per-use model can save you a ton of money, especially if your app has spiky or unpredictable traffic.
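To make the pay-per-use math concrete, here's a back-of-the-envelope estimate. The per-GB-second and per-request prices are AWS Lambda's published x86 rates for us-east-1 at the time of writing (treat them as assumptions, check current pricing, and note the free tier is ignored); the workload and the $30/month server price are hypothetical.

```python
# Assumed pay-per-use prices (AWS Lambda x86, us-east-1, at time of writing).
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20


def monthly_function_cost(requests: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate a month of pay-per-use compute: you pay only while code runs."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * PRICE_PER_GB_SECOND
    request_fees = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + request_fees


# Hypothetical spiky workload: 100,000 requests a month, 200 ms each, 512 MB.
serverless = monthly_function_cost(100_000, 200, 512)
always_on_server = 30.00  # hypothetical flat monthly price for a small VM

print(f"Serverless: ${serverless:.2f}/month vs. always-on: ${always_on_server:.2f}/month")
```

The comparison flips for steady, high-volume traffic: once a function runs almost constantly, per-invocation charges can add up to more than a server you keep busy around the clock.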

But it's not just about the money. The operational perks are huge:

- Near-Infinite Scalability: Serverless platforms scale up and down for you automatically. Whether you have ten users or ten million, the cloud provider handles the capacity, within your account's concurrency limits. No more late-night scrambles to add more servers.

- Accelerated Time-to-Market: Your developers get to stop worrying about provisioning servers and managing infrastructure. They can just focus on writing code that solves business problems, which means you can build, test, and ship new features a whole lot faster.

- Reduced Operational Overhead: Forget about patching operating systems, tweaking server configs, or guessing at capacity needs. All that maintenance work disappears, freeing up your engineering team to innovate instead of just keeping the lights on.

This financial model is a huge reason for its explosive growth. By one estimate, serverless-related services made up about 66% of all cloud infrastructure spending by 2023, and the market is projected to keep growing at a 22-25% clip as more businesses realize how efficient it can be.

The Practical Drawbacks And Challenges

Of course, serverless isn't a silver bullet. It introduces its own set of trade-offs and new kinds of complexity that you have to be ready for. These challenges are totally manageable, but you'll have a much better time if you walk in with your eyes open.

The most common issue you'll hear about is the "cold start." Since your functions aren't running all the time, the first request after a quiet period might have a slight delay while the provider spins up a new instance. For an e-commerce checkout or a real-time API, that lag can be a deal-breaker, though there are ways to keep your functions "warm."
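One common way to soften cold starts is to do expensive setup once, outside the handler, and optionally ping the function on a schedule so an instance stays warm. A minimal Python sketch of both ideas, assuming AWS Lambda's behavior of running module-level code once per new instance; the `warmer` event key and the setup function are hypothetical:

```python
# Module-level code runs once per cold start, during the instance's init phase;
# warm invocations on the same instance skip it and reuse what it created.
def _load_expensive_resources():
    """Hypothetical stand-in for slow setup such as SDK clients, config, or models."""
    return {"config": "loaded"}


RESOURCES = _load_expensive_resources()
_invocations = 0


def handler(event, context):
    global _invocations
    _invocations += 1

    # A scheduled keep-warm ping (the "warmer" key is a hypothetical convention)
    # returns immediately, so the instance stays alive without doing real work.
    if event.get("warmer"):
        return {"warmed": True}

    return {
        "coldStart": _invocations == 1,  # True only on this instance's first call
        "config": RESOURCES["config"],
    }


print(handler({"warmer": True}, None))  # first call on this (simulated) instance
print(handler({}, None))                # a real request served by the warm instance
```

Warming pings keep only one instance ready, so a burst of concurrent traffic can still hit cold starts. AWS also offers provisioned concurrency, which keeps a set number of instances initialized for you at extra cost.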

Vendor lock-in is another real strategic risk. When you build your app around a specific provider's services, like AWS Lambda and DynamoDB, moving to another cloud provider becomes a massive, expensive headache. Your code and architecture get tangled up in that one ecosystem.

Finally, the very nature of serverless makes it harder to debug and monitor. Instead of one big application log, you suddenly have logs scattered across hundreds of tiny, individual functions. This chaos demands new tools and a different mindset for troubleshooting. A strong security posture is also a must-have, which you can read more about in our guide on web application security best practices.

Here’s a quick look at how the pros and cons line up:

| Benefit | Corresponding Drawback |
|---|---|
| Lower Cost (Pay-Per-Use) | Unpredictable costs if usage spikes unexpectedly. |
| Automatic Scaling | Cold starts can introduce latency for initial requests. |
| Reduced Management | Increased risk of vendor lock-in to a specific cloud. |
| Faster Development | Debugging and monitoring distributed functions is more complex. |

Ultimately, getting a handle on serverless architecture means appreciating both its incredible potential and its built-in limitations. By weighing these pros and cons, you can make a smart call about where this powerful model fits into your tech stack.

Putting Serverless To Work With Real Examples

Okay, understanding the theory behind serverless is one thing, but seeing how it actually solves real-world problems is where it all clicks. Companies all over are using this model to handle everyday tasks way more efficiently. Looking at a few concrete examples really shows how the whole event-driven, pay-as-you-go nature of serverless creates actual business value.

These aren’t just fringe cases; we’re talking about core business functions that have been totally rethought without the need for servers that are always on and always costing you money. The result? Faster development, lower operating costs, and systems that can handle a sudden flood of users without breaking a sweat.

Let's dive into a few common scenarios where serverless really shines.

Automated Image And Video Processing

Imagine you run an e-commerce site or a social app where users are constantly uploading photos. In a traditional setup, you'd have a whole fleet of servers just sitting there, waiting to resize, watermark, or compress those files. With serverless, the whole process becomes incredibly lean.

Here’s how it works:

- The Trigger: A user uploads a new image or video to a cloud storage bucket (like Amazon S3).

- The Function: That upload event instantly and automatically triggers a serverless function.

- The Action: The function fires up, runs its code to create thumbnails, add a watermark, or convert a video, and then shuts down.

- The Benefit: You only pay for the few seconds of compute time it takes for each upload. The system can handle one file or a million simultaneous uploads, scaling automatically (within your account's concurrency limits) without you lifting a finger.

This is a textbook use case because it's completely event-driven, stateless, and deals with unpredictable demand—a perfect match for the serverless model.
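Here's a sketch of the trigger-and-function half of that pipeline, assuming Amazon S3 event notifications as the trigger. The bucket name and the `thumbnails/` output prefix are hypothetical, and the actual resizing is left as a comment so the example runs without an imaging library.

```python
from urllib.parse import unquote_plus

THUMBNAIL_PREFIX = "thumbnails/"  # hypothetical output folder


def handler(event, context):
    """React to 'object created' notifications from a storage bucket.

    The Records -> s3 -> bucket/object layout follows Amazon S3 event
    notifications. Object keys arrive URL-encoded, so they are decoded first.
    """
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.startswith(THUMBNAIL_PREFIX):
            # Skip our own output; otherwise each thumbnail we write would
            # trigger the function again in an endless loop.
            continue
        # A real function would download the image, resize it (for example
        # with Pillow's Image.thumbnail), and upload the result here.
        jobs.append({"bucket": bucket, "source": key, "target": THUMBNAIL_PREFIX + key})
    return {"processed": len(jobs), "jobs": jobs}


upload_event = {"Records": [
    {"s3": {"bucket": {"name": "user-uploads"}, "object": {"key": "profiles/ada+lovelace.jpg"}}},
    {"s3": {"bucket": {"name": "user-uploads"}, "object": {"key": "thumbnails/old.jpg"}}},
]}
print(handler(upload_event, None))
```

The prefix check matters more than it looks: if the function writes thumbnails back into the same bucket that triggers it, each output file would fire the function again. Writing to a separate bucket is another common way to break that loop.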

Building Scalable APIs And Microservices

Serverless is a fantastic way to build the backend APIs that power modern web and mobile apps. Instead of creating one giant, monolithic application, you can build your backend as a collection of small, independent functions—this is the microservices approach everyone talks about.

Each function handles one specific API endpoint. For example:

- getUserProfile

- processPayment

- addToCart

When a user’s phone or browser makes an API request, it triggers only the function it needs. That function does its job—maybe it grabs data from a database or calls another service—and sends back a response. This method keeps your development focused and lets different parts of your app scale on their own.

This granular approach means a sudden spike in users viewing profiles won't slow down your payment processing system. Each function scales independently, creating a far more resilient and cost-effective backend.
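A minimal sketch of those three endpoints as separate single-purpose handlers, in the API Gateway proxy-event style (`pathParameters`, `body`). The data, route paths, and the `ROUTES` dictionary are hypothetical: in production an API gateway maps each route to its own deployed function, and the dictionary here only simulates that mapping so the example runs locally.

```python
import json

# Hypothetical in-memory data so the sketch runs on its own.
PROFILES = {"42": {"id": "42", "name": "Ada"}}
CARTS = {}  # userId -> list of product IDs


def get_user_profile(event, context):
    profile = PROFILES.get(event["pathParameters"]["userId"])
    if profile is None:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {"statusCode": 200, "body": json.dumps(profile)}


def add_to_cart(event, context):
    body = json.loads(event["body"])
    cart = CARTS.setdefault(body["userId"], [])
    cart.append(body["productId"])
    return {"statusCode": 200, "body": json.dumps({"items": cart})}


def process_payment(event, context):
    body = json.loads(event["body"])
    # A real function would call a payment provider here.
    return {"statusCode": 202, "body": json.dumps({"amount": body["amount"], "status": "pending"})}


# Simulates the gateway: each route maps to its own independently scaled function.
ROUTES = {
    ("GET", "/users/{userId}"): get_user_profile,
    ("POST", "/cart"): add_to_cart,
    ("POST", "/payments"): process_payment,
}


def invoke(method, route, event):
    return ROUTES[(method, route)](event, None)


print(invoke("GET", "/users/{userId}", {"pathParameters": {"userId": "42"}}))
```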

Real-Time Data Processing And ETL Jobs

Every business needs to move and transform data. Extract, Transform, and Load (ETL) jobs are the bread and butter of tasks like prepping data for a sales dashboard or syncing information between different systems.

A serverless architecture can automate these workflows beautifully. For example, you could schedule a function to run every hour. When it triggers, it could pull new sales data from a database, reformat it, calculate key metrics, and then load the clean data into a data warehouse for analysis.
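The hourly job described above might be sketched like this. On AWS, the trigger could be a scheduled rule with a `rate(1 hour)` expression; here the run time is passed in directly, and the sales rows, regions, and list-based "warehouse" are all hypothetical stand-ins for a real database and data warehouse.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone


def extract(rows, start, end):
    """Pull only the sales recorded in the half-open window [start, end)."""
    return [r for r in rows if start <= r["sold_at"] < end]


def transform(rows):
    """Reformat raw sales into per-region revenue totals."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["region"]] += r["amount"]
    return [{"region": region, "revenue": round(total, 2)}
            for region, total in sorted(totals.items())]


def load(warehouse, metrics, run_at):
    """Append the cleaned metrics to a warehouse table (a list stands in here)."""
    for m in metrics:
        warehouse.append({**m, "loaded_at": run_at.isoformat()})


def run_hourly_etl(source_rows, warehouse, now):
    """What a scheduled function might do on each hourly trigger."""
    window_start = now - timedelta(hours=1)
    metrics = transform(extract(source_rows, window_start, now))
    load(warehouse, metrics, now)
    return len(metrics)


now = datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc)
sales = [
    {"region": "Omaha", "amount": 120.0, "sold_at": now - timedelta(minutes=10)},
    {"region": "Omaha", "amount": 80.5, "sold_at": now - timedelta(minutes=40)},
    {"region": "Lincoln", "amount": 45.0, "sold_at": now - timedelta(minutes=30)},
    {"region": "Lincoln", "amount": 999.0, "sold_at": now - timedelta(hours=3)},  # older run
]
warehouse = []
run_hourly_etl(sales, warehouse, now)
print(warehouse)
```

Using a half-open window, from one hour ago up to but not including the run time, means a sale recorded exactly on the hour is counted by one run, not two.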

This powerful, flexible approach is a huge reason the serverless market is exploding. One market analysis projects it will jump from $15.3 billion in 2024 to over $64.9 billion by 2032; you can dig into the full analysis on serverless market trends for a deeper look. This growth reflects the industry-wide shift toward more scalable, on-demand IT systems.

Your First Steps Into Serverless Development

Jumping into serverless might feel like a huge leap, but getting your feet wet is honestly more straightforward than you'd think. The whole journey really kicks off with one big decision that’ll steer the rest of your project: picking the right cloud provider.

The serverless world is pretty much run by three major players, each with a solid Function-as-a-Service (FaaS) platform. Your choice will probably come down to what your team already knows or which cloud ecosystem your business is already plugged into.

- AWS Lambda: As the OG in the space, Lambda is the most mature and feature-rich option out there. It’s woven into every corner of the AWS ecosystem, making it a go-to for anything from simple data processing jobs to the backbone of complex microservices.

- Azure Functions: A powerful choice from Microsoft, especially if your team is already comfortable in the .NET world. The tooling is excellent, and its triggers are both flexible and surprisingly powerful.

- Google Cloud Functions: Known for its clean, simple approach and killer integration with Google’s data and machine learning services. It’s a fantastic starting point for event-driven apps running on Google Cloud Platform (GCP).

Adopting Key Best Practices Early

Once you’ve picked your provider, your success really depends on building the right habits from the get-go. Serverless isn't just a different way to host code; it's a different way to think about building applications. Nailing down a few core principles early on will save you a ton of headaches later.

First things first, use a serverless framework. Seriously. Tools like the Serverless Framework or AWS SAM (Serverless Application Model) take care of the tedious work of defining, deploying, and managing your functions and all their related cloud bits and pieces. They handle the messy configuration so you can just focus on writing code.

Another habit to get into is designing single-responsibility functions. Each function should do one thing, and only one thing, exceptionally well. This makes your code a thousand times easier to test, debug, and maintain. For instance, one function could handle user sign-ups, while a totally separate one processes profile picture uploads.

Think of your functions as specialized tools in a workshop. You wouldn't use a hammer to cut a piece of wood. Similarly, each serverless function should be designed for a single, specific purpose to ensure clarity and efficiency.

Finally, get your logging and monitoring set up from day one—don't treat it as an afterthought. When your application is spread across dozens of functions, figuring out what went wrong can feel like finding a needle in a haystack. A centralized logging solution gives you a single pane of glass to see what’s happening everywhere, which makes troubleshooting way faster.
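Centralized logging works best when every function writes structured lines that share a common ID. Here's a sketch using Python's standard `logging` and `json` modules. `aws_request_id` is a real attribute of the AWS Lambda context object (faked here with `SimpleNamespace`), while the `correlationId` field name and the checkout function are hypothetical conventions.

```python
import json
import logging
import sys
from types import SimpleNamespace

logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the runtime configures the root logger
_stream = logging.StreamHandler(sys.stdout)
_stream.setFormatter(logging.Formatter("%(message)s"))
logger.addHandler(_stream)


def log_event(level, message, correlation_id, **fields):
    """Emit one JSON log line that a centralized log tool can search and join."""
    entry = {"level": level, "message": message, "correlationId": correlation_id, **fields}
    logger.log(getattr(logging, level), json.dumps(entry))
    return entry


def handler(event, context):
    # Reuse the caller's correlation ID so one user action can be traced across
    # every function it touches; otherwise start one from this request's ID.
    correlation_id = event.get("correlationId") or context.aws_request_id
    log_event("INFO", "order received", correlation_id,
              function="checkout", items=len(event.get("items", [])))
    # Pass the ID along in whatever event this function emits next.
    return {"statusCode": 200, "correlationId": correlation_id}


fake_context = SimpleNamespace(aws_request_id="req-123")  # stand-in for Lambda's context
print(handler({"items": ["sku-1", "sku-2"]}, fake_context))
```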

And on that note, lock down your security by implementing least-privilege permissions for every single function. This just means a function should only have access to the absolute minimum resources it needs to do its job. It’s a simple concept that dramatically reduces your security risk.
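On AWS, least privilege is expressed as an IAM policy attached to each function's role. This sketch builds one for a hypothetical sign-up function that only ever writes users: it allows a single action on a single table and rejects wildcard actions. The account ID, region, and table name in the ARN are hypothetical placeholders.

```python
import json


def least_privilege_policy(table_arn: str, actions: list) -> dict:
    """Build an IAM policy document granting only the listed actions on one table."""
    if any(a == "*" or a.endswith(":*") for a in actions):
        raise ValueError("wildcard actions defeat least privilege")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": sorted(actions), "Resource": table_arn}
        ],
    }


# Hypothetical account ID and table name; this function only ever writes users.
signup_policy = least_privilege_policy(
    "arn:aws:dynamodb:us-east-1:123456789012:table/Users",
    ["dynamodb:PutItem"],
)
print(json.dumps(signup_policy, indent=2))
```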

Common Questions About Serverless Architecture

When teams first start kicking the tires on serverless, the same few questions always seem to pop up. Getting straight answers is the only way to really wrap your head around the trade-offs and figure out if it's the right move for your project.

The first question, without fail, is: "Wait, is it really 'server-less'?" The name throws everyone off. The short answer is no, of course there are still servers. The big difference is they’re not your problem anymore. The cloud provider takes care of everything—provisioning, patching, scaling—so your team never has to think about the underlying hardware.

When To Avoid Using Serverless

Another big one is figuring out when serverless is actually a bad idea. It’s powerful, but it's no silver bullet. It's usually the wrong tool for the job if you have long-running, stateful tasks. Think about a process that needs to chug along for hours, like encoding a full-length movie. A serverless function isn't built for that—it's designed for quick, stateless bursts of work, and trying to force it would get inefficient and expensive fast.
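Part of the reason is a hard limit: AWS Lambda, for example, caps a single invocation at 15 minutes, so longer work has to be split up and checkpointed. The sketch below shows that pattern using the Lambda context's `get_remaining_time_in_millis()` method, with a fake context so it runs locally; the safety margin, the simulated per-item work, and the `checkpoint` field are hypothetical.

```python
import time

SAFETY_MARGIN_MS = 10_000  # hypothetical buffer for saving progress before the timeout


class FakeContext:
    """Local stand-in for the Lambda context object. The real one provides
    get_remaining_time_in_millis(); this version counts down a wall-clock budget."""

    def __init__(self, budget_ms):
        self._deadline = time.monotonic() + budget_ms / 1000

    def get_remaining_time_in_millis(self):
        return max(0, int((self._deadline - time.monotonic()) * 1000))


def process_item(item):
    time.sleep(0.01)  # pretend each item takes about 10 ms of real work


def handler(event, context):
    """Work through a batch, handing back a checkpoint before time runs out."""
    items = event["items"]
    position = event.get("checkpoint", 0)
    while position < len(items):
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Out of time: report progress so another invocation can resume here.
            return {"done": False, "checkpoint": position}
        process_item(items[position])
        position += 1
    return {"done": True, "checkpoint": position}


# With only about 200 ms to spare above the margin, 100 items can't all finish.
print(handler({"items": list(range(100))}, FakeContext(10_200)))
```

That bookkeeping is exactly the overhead the paragraph above warns about. For work that genuinely runs for hours, a container or VM (or a workflow service such as AWS Step Functions chaining shorter steps) is usually the more natural fit.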

Likewise, applications that need consistently low, predictable latency on every single request might run into issues with cold starts.

A cold start is that small delay you see the very first time a function is called after it’s been sitting idle. There are ways to minimize it, but it’s a fundamental trade-off to weigh for anything that needs to be hyper-responsive.

Key Tools for Serverless Management

Finally, developers always want to know what tools they'll be using. Juggling hundreds of individual functions by hand sounds like a nightmare, and it would be, but several frameworks have emerged to make it surprisingly manageable. The most popular tools that help tame serverless applications include:

- The Serverless Framework: This is a crowd favorite because it’s cloud-agnostic. It gives you a clean way to deploy and manage your apps across AWS, Azure, and Google Cloud.

- AWS Serverless Application Model (SAM): If you're all-in on AWS, SAM is an open-source framework that makes defining your serverless resources much more straightforward.

- Terraform: While it's a much broader infrastructure-as-code tool, tons of teams use Terraform to manage their serverless pieces right alongside the rest of their cloud infrastructure, keeping everything in one place.

These frameworks handle all the tedious configuration and deployment work, freeing up your team to just focus on writing code.

Ready to build a scalable, high-performance web application without the infrastructure headaches? The team at Up North Media specializes in custom serverless solutions that drive growth for Omaha businesses. Schedule your free consultation today!